Multicast Overview Summary

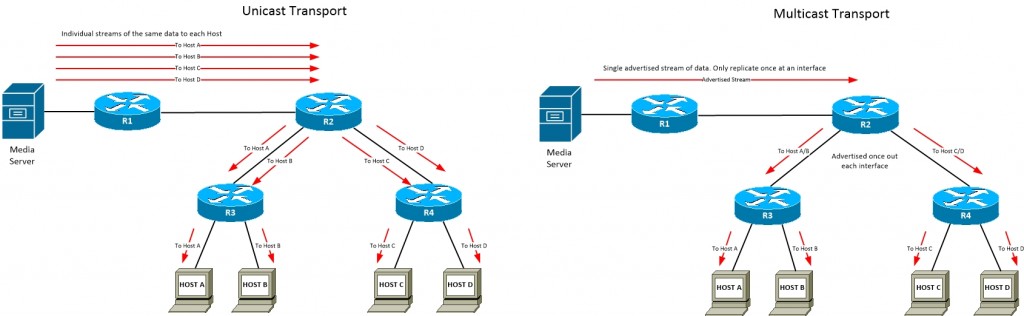

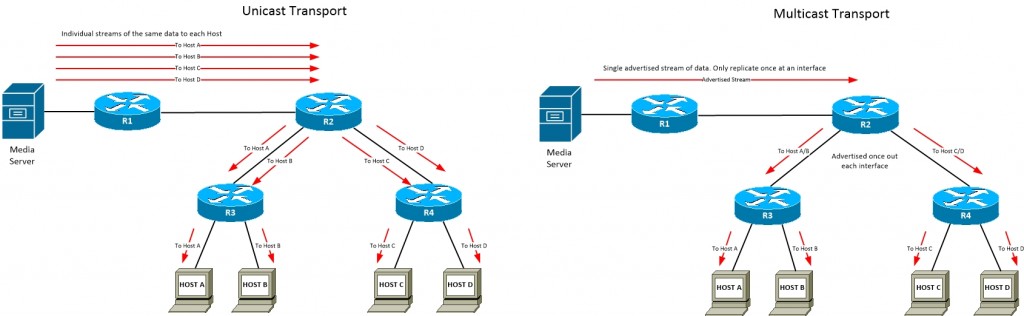

- Provides one-to-many communication over a Layer 3 network

- Reduces traffic load compared with unicast (one copy per receiver) or broadcast (delivered to every host on the segment)

- The multicast data stream is replicated only once per outgoing interface, regardless of how many receivers sit behind it

- The source doesn’t need to know who the receivers are

Figure 1 – Multicast Overview

- Consists of 3 components

- Group Address

- Control Plane

- Multicast routing protocols (e.g. PIM)

- Builds Multicast Tree

- Loop-free tree from sender to receiver

- Router to Router communication

- Who is sending traffic to what group

- Who is receiving traffic and for what group

- Data Plane

Multicast Addressing

Multicast Address Ranges

- Multicast sits in the Class D address space

- 224.0.0.0/4

- 224.0.0.0 - 239.255.255.255

- High-order bits: 1110

| Address Range | Description |
| --- | --- |
| 224.0.0.0/24 (224.0.0.0 - 224.0.0.255) | Permanent multicast groups; link-local only, not routed; sent with a TTL of 1 |
| 224.0.1.0/24 (224.0.1.0 - 224.0.1.255) | Permanent multicast groups; routed address space (e.g. Auto-RP 224.0.1.39) |
| 232.0.0.0/8 (232.0.0.0 - 232.255.255.255) | Source Specific Multicast (SSM) |
| 233.0.0.0/8 (233.0.0.0 - 233.255.255.255) | GLOP address space; assigns a /24 (256 multicast addresses) to an organization with a registered 16-bit ASN; the ASN is encoded in the 2nd and 3rd octets (233.X.Y.0/24, where X.Y is the ASN written as two bytes) |
| 239.0.0.0/8 (239.0.0.0 - 239.255.255.255) | Private (administratively scoped) multicast address space; equivalent to RFC 1918 private unicast address space |
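A minimal Python sketch of the range lookup in the table above, plus the GLOP calculation. It uses only the standard `ipaddress` module; the function names `classify` and `glop_block` are hypothetical, and the labels simply echo the table.

```python
import ipaddress

# Ranges from the table above, most specific first.
RANGES = [
    ("224.0.0.0/24", "Link-local (not routed, TTL 1)"),
    ("224.0.1.0/24", "Permanent groups, routed"),
    ("232.0.0.0/8", "Source Specific Multicast (SSM)"),
    ("233.0.0.0/8", "GLOP"),
    ("239.0.0.0/8", "Private (administratively scoped)"),
]

def classify(addr: str) -> str:
    ip = ipaddress.IPv4Address(addr)
    if not ip.is_multicast:                      # outside 224.0.0.0/4
        raise ValueError(f"{addr} is not an IPv4 multicast address")
    for prefix, label in RANGES:
        if ip in ipaddress.IPv4Network(prefix):
            return label
    return "Other multicast"

def glop_block(asn: int) -> ipaddress.IPv4Network:
    """GLOP: a 16-bit ASN becomes the 2nd and 3rd octets of 233.X.Y.0/24."""
    if not 1 <= asn <= 65535:
        raise ValueError("GLOP only covers 16-bit AS numbers")
    return ipaddress.IPv4Network(f"233.{asn >> 8}.{asn & 0xFF}.0/24")

print(classify("224.0.0.13"))   # Link-local (not routed, TTL 1)
print(glop_block(5662))         # 233.22.30.0/24
```

AS 5662 is 0x161E, so its high byte (22) and low byte (30) become the second and third octets.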


Reserved Multicast Addresses

| Address | Description |
| --- | --- |
| 224.0.0.1 | All multicast hosts on the same segment |
| 224.0.0.2 | All multicast routers on the same segment |
| 224.0.0.4 | Distance Vector Multicast Routing Protocol (DVMRP) routers |
| 224.0.0.5 | OSPF All Routers |
| 224.0.0.6 | OSPF Designated Routers |
| 224.0.0.9 | RIPv2 Routers |
| 224.0.0.10 | EIGRP Routers |
| 224.0.0.13 | All PIMv2 Routers |
| 224.0.0.18 | VRRP |
| 224.0.0.22 | IGMPv3 Membership Reports |
| 224.0.0.102 | HSRPv2 |
| 224.0.1.39 | Cisco Auto-RP-Announce |
| 224.0.1.40 | Cisco Auto-RP-Discovery |


Multicast Address Mapping

- Mapping Multicast addresses to MAC addresses

- Uses the reserved MAC address range:

- 01-00-5E-00-00-00 to 01-00-5E-7F-FF-FF

- Only 23 bits of the MAC are available for the group (the high-order bit of the fourth MAC byte is always 0), while a multicast IP address has 28 variable bits, so 5 bits of the multicast address are not copied into the MAC

- As a result, up to 32 (2^5) multicast addresses map to a single MAC address

- Process:

- Convert the multicast IP address to binary

- Set the 24th low-order bit (the high-order bit of the second octet) to 0

- Replace the low-order 24 bits of the reserved MAC address with the resulting low-order 24 bits of the IP address

- Convert the low-order bits to hex

- Add them to the 01-00-5E MAC address prefix

- Example:

- Convert 224.155.233.10 to MAC address

- Convert 224.155.233.10 to binary

| Octet | 1 | 2 | 3 | 4 |
| --- | --- | --- | --- | --- |
| IP | 224 | 155 | 233 | 10 |
| Bits | 1110 0000 | 1001 1011 | 1110 1001 | 0000 1010 |
| Count | 32-25 | 24-17 | 16-9 | 8-1 |

- Set the 24th low-order bit to 0

| Octet | 1 | 2 | 3 | 4 |
| --- | --- | --- | --- | --- |
| IP | 224 | 155 | 233 | 10 |
| Bits | 1110 0000 | 0001 1011 | 1110 1001 | 0000 1010 |
| Count | 32-25 | 24-17 | 16-9 | 8-1 |

- Replace the low-order 24 bits of the MAC address with the 24 low-order bits of the IP address and convert to hex

| Octet | 2 | 3 | 4 |
| --- | --- | --- | --- |
| Hex | 1B | E9 | 0A |
| Bits | 0001 1011 | 1110 1001 | 0000 1010 |
| Count | 24-17 | 16-9 | 8-1 |

- Add to 01-00-5E

- 01-00-5E-1B-E9-0A

- 01-00-5E-1B-E9-0A is also used by the following IP multicast addresses (32 in total):

- 224.27.233.10

- 224.155.233.10

- 225.27.233.10

- 225.155.233.10

- 226.27.233.10

- 226.155.233.10

- 227.27.233.10

- 227.155.233.10

- ...

- 239.27.233.10

- 239.155.233.10
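The same mapping can be checked with a short Python sketch (function names are hypothetical). It keeps the low-order 23 bits of the group address, ORs them into the 01-00-5E-00-00-00 base, and then regenerates all 32 groups that share the resulting MAC by cycling through the 5 bits that are lost.

```python
import ipaddress

def multicast_mac(group: str) -> str:
    ip = int(ipaddress.IPv4Address(group))
    if ip >> 28 != 0b1110:
        raise ValueError(f"{group} is not an IPv4 multicast address")
    low23 = ip & 0x7FFFFF               # low-order 23 bits (bit 24 forced to 0)
    mac = 0x01005E000000 | low23        # add to the 01-00-5E base
    return "-".join(f"{(mac >> shift) & 0xFF:02X}" for shift in range(40, -1, -8))

def overlapping_groups(group: str) -> list:
    """All 32 groups that share a MAC with `group` (the 5 lost bits vary)."""
    low23 = int(ipaddress.IPv4Address(group)) & 0x7FFFFF
    return [str(ipaddress.IPv4Address((0b1110 << 28) | (lost << 23) | low23))
            for lost in range(32)]

print(multicast_mac("224.155.233.10"))           # 01-00-5E-1B-E9-0A
print(overlapping_groups("224.155.233.10")[:3])  # ['224.27.233.10', '224.155.233.10', '225.27.233.10']
```

The list comes out in the same order as the addresses above: the lowest lost bit toggles the top bit of the second octet (27 or 155), and the other four select the first octet (224 to 239).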


Internet Group Management Protocol (IGMP) Overview

- Used for communication between hosts (receivers) and their local router

- Informs the router that a host wants to start or stop receiving multicast traffic for a specific group

- Controls which multicast feeds the router forwards onto the segment

- Enabled automatically when PIM is enabled on an interface

- IGMP packets aren’t forwarded by routers; they stay on the local LAN

IGMPv1

- Legacy version

- Replaced by IGMPv2

- Only supports group-specific (*,G) joins

Messages: Membership Query

- Sent to 224.0.0.1 by Multicast router

- Discovers group members on a segment


Messages: Membership Report

- Also called an "IGMP Join" message

- Sent by a host to the address of the group it wants to join


Packet Format

| Bits 0-3 | Bits 4-7 | Bits 8-15 | Bits 16-31 |
| --- | --- | --- | --- |
| Version | Type | Unused | IGMP Checksum |
| Group Address (32 bits, second word) | | | |

| Field | Size (bits) | Values |
| --- | --- | --- |
| Version | 4 | Set to 1 |
| Type | 4 | 1 - Host Membership Query; 2 - Host Membership Report; 3 - DVMRP (Distance Vector Multicast Routing Protocol) |
| Unused | 8 | Set to 0 |
| IGMP Checksum | 16 | Checksum of packet |
| Group Address | 32 | Query messages: set to 0.0.0.0; Report messages: set to the group address |
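To make the IGMPv1 layout concrete, here is a Python sketch that packs the 8-byte header with `struct` and fills in the checksum. It assumes the checksum is the standard Internet checksum (RFC 1071 one's-complement sum) computed over the IGMP message with the checksum field zeroed; `build_igmpv1` and `internet_checksum` are hypothetical helpers, and actually sending the bytes would need a raw socket, which is not shown.

```python
import socket
import struct

def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement of the one's-complement sum (RFC 1071)."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:                       # fold carries back into 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def build_igmpv1(msg_type: int, group: str = "0.0.0.0") -> bytes:
    """msg_type 1 = Host Membership Query, 2 = Host Membership Report."""
    version_type = (1 << 4) | msg_type       # Version (high nibble) = 1, Type (low nibble)
    group_bytes = socket.inet_aton(group)
    # Checksum is computed with the checksum field set to 0, then filled in.
    unsigned = struct.pack("!BBH4s", version_type, 0, 0, group_bytes)
    return struct.pack("!BBH4s", version_type, 0, internet_checksum(unsigned), group_bytes)

query = build_igmpv1(1)
print(query.hex())  # 1100eeff00000000
```

A receiver can verify a packet by running the same checksum over all 8 bytes: a valid packet sums to 0.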


IGMPv2

- Default version on Cisco IOS when IGMP is enabled on an interface

- Backwards compatible with IGMPv1

- Leave Group messages sent to 224.0.0.2 (all multicast routers)

- Only supports group-specific (*,G) joins

- Provides the following additions to IGMPv1

- Explicit Leave mechanism

- Querier Election process

- Selects the preferred router to send Query messages when there is more than one router on the subnet

- Group-Specific Queries

- Query a specific group rather than all multicast hosts

- Timers

- Speeds up query and response time-outs

Packet Format

- Same 8-byte layout as IGMPv1 for backward compatibility: the IGMPv1 Version and Type fields are merged into a single 8-bit Type field (e.g. 0x11 = Version 1, Type 1), and the Unused field carries the Maximum Response Time

| Bits 0-7 | Bits 8-15 | Bits 16-31 |
| --- | --- | --- |
| Type | Maximum Response Time | IGMP Checksum |
| Group Address (32 bits, second word) | | |

| Field | Size (bits) | Values |
| --- | --- | --- |
| Type | 8 | See Type Field |
| Maximum Response Time | 8 | Set in Query messages only, in units of 1/10 second |
| IGMP Checksum | 16 | Checksum of packet |
| Group Address | 32 | General Query: set to 0.0.0.0; Group-Specific Query, Report and Leave messages: set to the group address |


Type Field

- 0x11 - Membership Query

- Equivalent to IGMPv1 Host Membership Query

- Sent by multicast routers to discover group members on a segment

- 0x12 - Membership Report

- Equivalent to IGMPv1 Host Membership Report

- Used by IGMPv2 hosts for backward compatibility with IGMPv1

- Sent by Hosts to router

- 0x13 - DVMRP

- 0x14 - PIMv1

- 0x15 - Cisco Trace Messages

- 0x16 - Version 2 Membership Report

- Sent by Host to router

- Informs router at least one group member is present on segment

- 0x17 - Leave Group

- Sent by Host to router

- Informs router it wants to leave the group

- 0x1E - Multicast Traceroute Response

- 0x1F - Multicast Traceroute


Maximum Response Time

- Uses the IGMPv1 Unused field

- Set only in Query messages (ignored in other message types)

- Set in units of 1/10th second

- Default is 100 = 10 seconds

- Value range from 1 to 255
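The IGMPv2 Type and Maximum Response Time fields can be decoded as in this sketch. It assumes the 8-byte IGMPv2 layout (8-bit Type, 8-bit Max Response Time, 16-bit checksum, 32-bit group) and only names the four core message types listed above; `parse_igmpv2` is a hypothetical helper and does not verify the checksum.

```python
import socket
import struct

IGMP_TYPES = {
    0x11: "Membership Query",
    0x12: "Version 1 Membership Report",
    0x16: "Version 2 Membership Report",
    0x17: "Leave Group",
}

def parse_igmpv2(packet: bytes) -> dict:
    msg_type, max_resp, checksum, group = struct.unpack("!BBH4s", packet[:8])
    info = {
        "type": IGMP_TYPES.get(msg_type, f"Other (0x{msg_type:02X})"),
        "group": socket.inet_ntoa(group),
        "checksum": checksum,
    }
    if msg_type == 0x11:
        # Max Response Time is only meaningful in Queries; units are 1/10 second.
        info["max_response_s"] = max_resp / 10
    return info

print(parse_igmpv2(bytes.fromhex("1164ee9b00000000")))
# {'type': 'Membership Query', 'group': '0.0.0.0', 'checksum': 61083, 'max_response_s': 10.0}
```

The example is a General Query with the default Maximum Response Time of 100 (0x64), i.e. 10 seconds.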


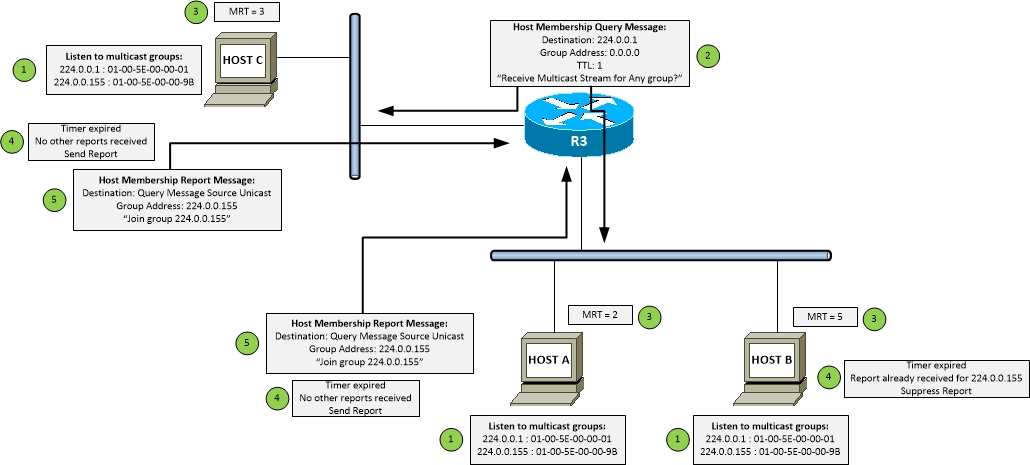

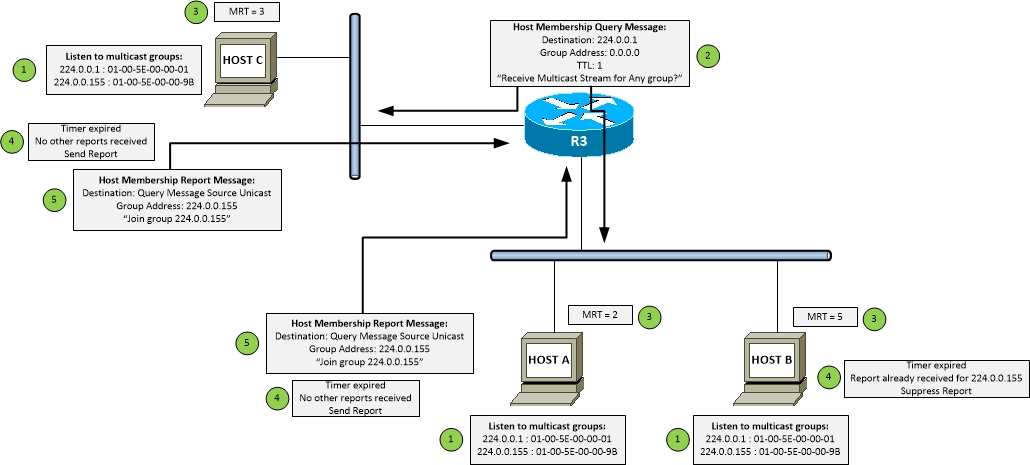

Membership Query/Report Process

- Query:

- Determines if a multicast group member is on an interface

- Sent every 60 seconds by default

- Sent to 224.0.0.1 / 01-00-5E-00-00-01

- Report:

- Tells the router which multicast groups the host wants to receive traffic for

- Sent in response to Membership Queries, and unsolicited when a host wants to join a group

- Report Suppression:

- If several hosts on a segment want the same feed and all of them send a Report, resources are wasted, because the router needs only one Report to keep forwarding the feed onto that segment

- Report Suppression ensures that only one host on the segment sends a Membership Report back to the router

- It uses the Maximum Response Time (MRT) field

Figure 2 - IGMPv2 Membership Query/Report process

- Process:

- 1. Hosts that want to join multicast group 224.0.0.155 start listening for traffic to that group and to the all-hosts group (224.0.0.1), to which Queries are sent.

- 2. R3 periodically sends Membership Query messages to find out whether any hosts want to receive any multicast groups.

- 3. Each host picks a random value between 0 and the Maximum Response Time (MRT) and starts a countdown timer from that value.

- 4. When its timer expires, a host sends a Membership Report unless it has already seen another host's Report for the same group, in which case it suppresses its own.

- 5. The reporting host's Membership Report tells the router which multicast groups the host wants to join.
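Report suppression can be illustrated with a toy simulation. It assumes every host hears every other host's Report instantly (Reports are sent to the group address, so members do hear them), which real networks only approximate; `simulate_report_suppression` is a hypothetical name.

```python
import random

def simulate_report_suppression(hosts, max_response_time_s=10.0, seed=None):
    """Return (reporting host, its delay in seconds, suppressed hosts)."""
    rng = random.Random(seed)
    # Step 3: each host picks a random delay between 0 and the MRT.
    timers = {host: rng.uniform(0, max_response_time_s) for host in hosts}
    # Step 4: the first timer to expire sends the Report...
    reporter = min(timers, key=timers.get)
    # ...and every other host hears it and cancels its own Report.
    suppressed = sorted(host for host in hosts if host != reporter)
    return reporter, timers[reporter], suppressed

reporter, delay, suppressed = simulate_report_suppression(["A", "B", "C"], seed=1)
print(f"{reporter} reports after {delay:.2f}s; suppressed: {suppressed}")
```

However many members there are, the router receives one Report per Query per group.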


Group-Specific Query and Leave Process

- Group-Specific Query stops the router from cutting off a group on a segment when one host leaves while other members remain

- The router sends a Query for that specific group to check whether any other hosts on the segment still want the stream

- Leave message reduces the leave latency (3 minutes in IGMPv1 to 3 seconds in IGMPv2)

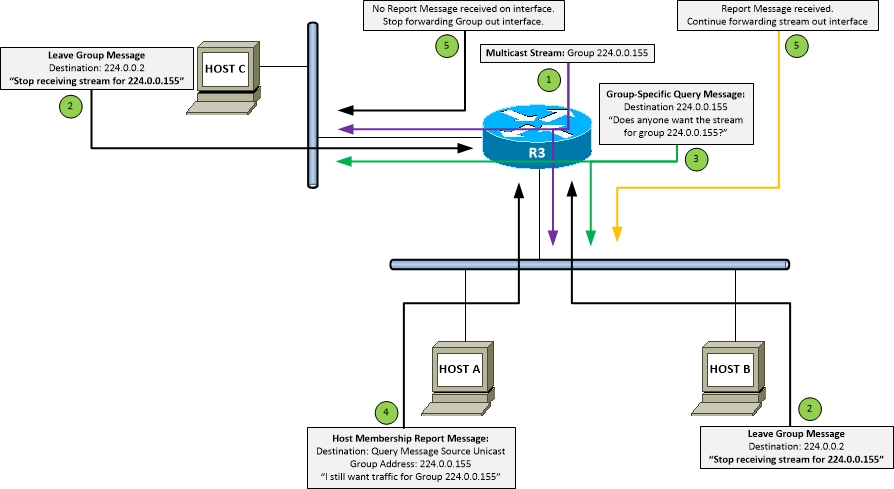

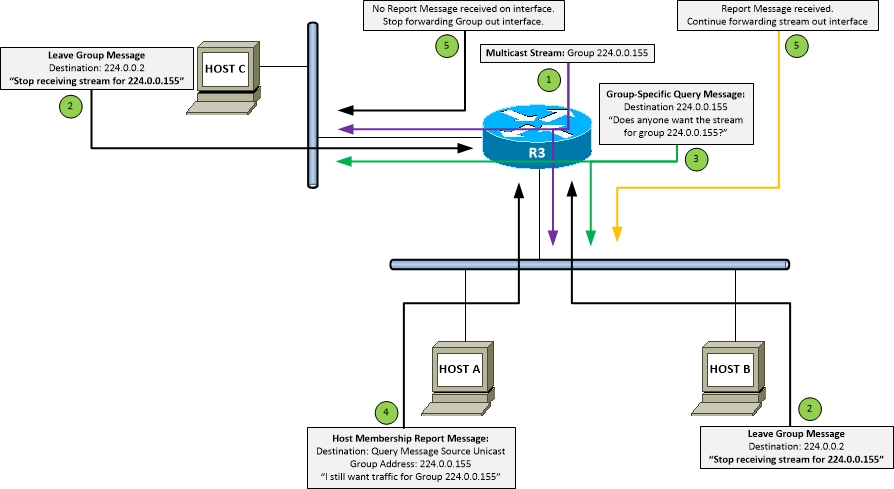

Figure 3 - Group-Specific Query/Leave process

- Process:

- 1. The multicast stream is sent out every interface where hosts have requested it

- 2. Hosts B and C send a Leave Group message for group 224.0.0.155 to the all-routers multicast address 224.0.0.2, saying they no longer want the multicast feed

- 3. R3 sends a Group-Specific Query out that interface, asking whether any other host on it still wants the stream for group 224.0.0.155

- 4. Host A sends a Membership Report saying it still wants to receive the multicast stream for group 224.0.0.155

- 5. If R3 receives no Membership Report after sending the Group-Specific Query twice (last-member-query-count, default 2), one Last Member Query Interval (default 1 second) apart, it stops forwarding the stream out that interface. If a Membership Report is received, R3 keeps forwarding the stream out that interface


Querier Process


Timers

| Timer Command | Description | Default Value | Range |
| --- | --- | --- | --- |
| ip igmp query-interval <secs> | Interval between Query Messages sent by the router | 60 | 1 - 18000 |
| ip igmp query-max-response-time <secs> | Time within which hosts must respond to a Query Message sent by the router | 10 | 0.1 - 25.5 |
| ip igmp querier-timeout <secs> | If a Non-Querier router receives no Query Message within this period, it restarts the querier election | 255 | 60 - 300 |
| ip igmp last-member-query-count <#> | Number of Group-Specific Queries sent after a Leave before the router concludes no more hosts want the multicast stream | 2 | 1 - 7 |
| ip igmp last-member-query-interval <secs> | Interval between the out-of-sequence (Group-Specific) Query Messages sent after a Leave Message is received on the interface | 1 | 0.1 - 25.5 |
| Group Membership Interval | Time that must pass before the router decides there are no more members of a group on a segment | 260 | Not configurable |
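The 260-second Group Membership Interval and the 255-second querier timeout in the table match the formulas in RFC 2236 when its default 125-second query interval is used; with the 60-second IOS query interval the same formulas give smaller values. The sketch below only evaluates those RFC formulas. Whether a given IOS release derives its own timers the same way is an assumption, and `igmpv2_timers` is a hypothetical helper.

```python
def igmpv2_timers(query_interval=125.0, query_response_interval=10.0, robustness=2,
                  last_member_query_interval=1.0, last_member_query_count=2):
    """Derived IGMPv2 timers (seconds) using the RFC 2236 formulas."""
    return {
        # Group Membership Interval = RV * Query Interval + Query Response Interval
        "group_membership_interval": robustness * query_interval + query_response_interval,
        # Other Querier Present Interval = RV * Query Interval + Query Response Interval / 2
        "other_querier_present_interval": robustness * query_interval + query_response_interval / 2,
        # Approximate leave latency once the last member has left
        "leave_latency": last_member_query_count * last_member_query_interval,
    }

print(igmpv2_timers())                   # 260.0, 255.0 and 2.0 seconds
print(igmpv2_timers(query_interval=60))  # 130.0, 125.0 and 2.0 seconds
```

The leave latency line is why IGMPv2 cuts leave time to a few seconds: it depends only on the last-member query count and interval, not on the query interval.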


IGMPv3

- Support for Source Specific Multicast (SSM)

- Supports source-specific joins as well as group-specific joins

- (S,G) join

- IGMPv3 receiver must already know about the sender

- Membership Reports sent to 224.0.0.22 (all IGMPv3-capable multicast routers)

- Enabled at the interface level

- Command:

- (config-if)#ip igmp version 3


Filtering IGMP

- Various methods can be used to filter IGMP

- IGMP ACL facing towards receiver to block access to Groups

- Applied on interface facing receivers

- Can use standard or extended access lists

- Extended access lists can be used to filter IGMPv3 Reports

- Extended access list structure: permit ip <source IP> <source wildcard> <group IP> <group wildcard>

- Standard access list structure: permit <group IP> <group wildcard>

- Command:

- (config-if)#ip igmp access-group <acl>

- Use a multicast boundary to filter both control plane and data plane traffic

- Applied on the boundary interface, facing away from the multicast domain

- Command:

- (config-if)#ip multicast boundary <acl> [in | out] [filter-autorp]
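Both access-list formats above match addresses against Cisco-style wildcard masks (0 bits must match, 1 bits are ignored), evaluated top-down with an implicit deny at the end. The sketch models only that matching logic for an (S,G) IGMPv3 report against extended entries; the tuple format and function names are hypothetical and this is not how IOS stores ACLs.

```python
import ipaddress

def wildcard_match(addr: str, base: str, wildcard: str) -> bool:
    """Cisco-style wildcard match: 0 bits must match, 1 bits are ignored."""
    a, b, w = (int(ipaddress.IPv4Address(x)) for x in (addr, base, wildcard))
    care = ~w & 0xFFFFFFFF
    return (a & care) == (b & care)

def report_permitted(acl, source: str, group: str) -> bool:
    """acl: ordered (action, src, src_wildcard, group, group_wildcard) entries."""
    for action, src, src_wc, grp, grp_wc in acl:
        if wildcard_match(source, src, src_wc) and wildcard_match(group, grp, grp_wc):
            return action == "permit"   # first match wins
    return False                        # implicit deny at the end

# Roughly: permit ip host 10.0.0.1 239.0.0.0 0.0.0.255
acl = [("permit", "10.0.0.1", "0.0.0.0", "239.0.0.0", "0.0.0.255")]
print(report_permitted(acl, "10.0.0.1", "239.0.0.100"))  # True
print(report_permitted(acl, "10.0.0.2", "239.0.0.100"))  # False
```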


Protocol Independent Multicast (PIM)

PIM Overview Summary

- Multicast routing protocol

- IP Protocol 103

- PIM Neighbor Discovery messages sent to 224.0.0.13

- PIMv2 is the default

- Multicast routing needs to be enabled globally

- Command:

- (config)#ip multicast-routing [distributed] [vrf <vrf-name>]

- Doesn't include its own topology discovery mechanism

- Relies on the unicast routing table (populated by an IGP or static routes) for routing information and RPF checks

- Controls how the multicast tree is built

- (*,Group) = Shared Tree or Rendezvous Point Tree (RPT)

- Any Source Multicast (ASM)

- (Source, Group) = Shortest Path Tree (SPT)

- Source Specific Multicast (SSM)

- 2 different modes of operation:

- Dense Mode (PIM-DM)

- Sparse Mode (PIM-SM)

- Cisco has hybrid implementation

- Sparse-Dense-Mode (PIM-SDM)

- To make the router itself join a group on an interface (e.g. for testing), use the IGMP join-group command

- Command:

- (config-if)#ip igmp join-group <group address>


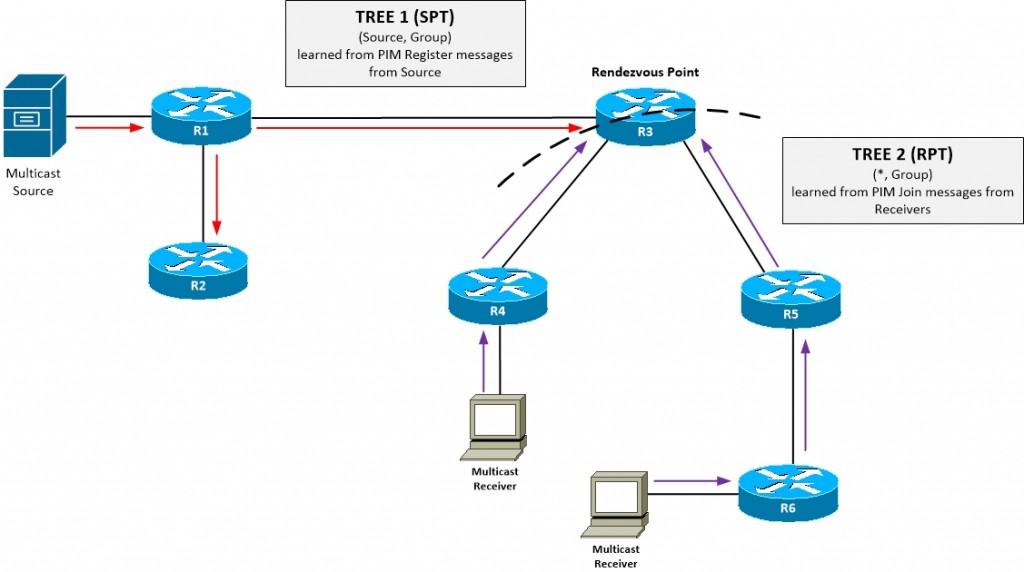

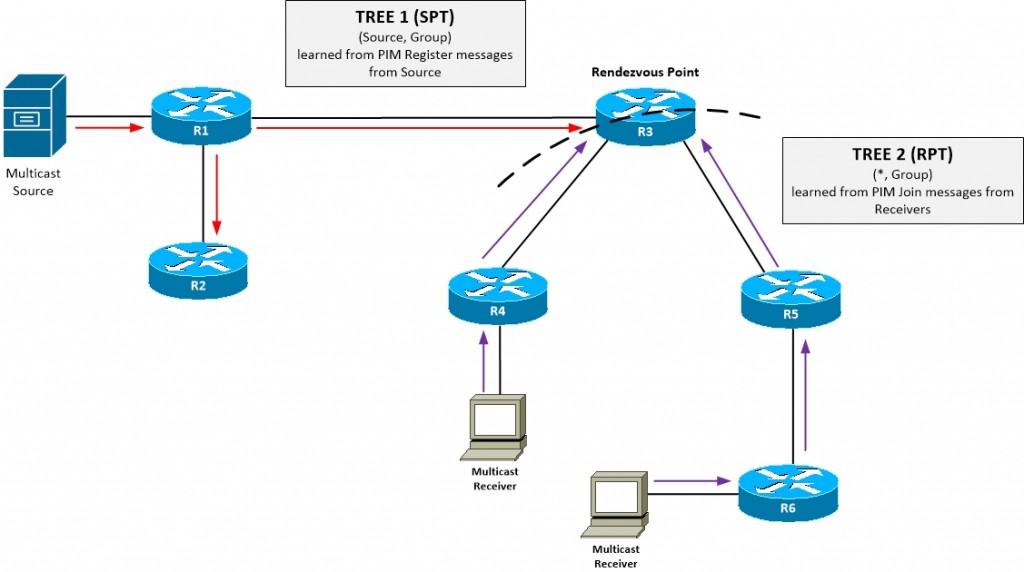

Rendezvous Point Tree (RPT) - Shared Tree

- Defines how traffic is routed from source to client

- Built along the reverse path: each router uses its unicast route back towards the RP (RPF) to decide where traffic should arrive from

- Reverse of normal (destination-based) routing

- Uses shortest path from source to Rendezvous Point, then shortest path from RP to client

- Used in PIM-SM only


Shortest Path Tree (SPT) - Source Tree

- Defines how traffic is routed from source to client

- Built along the reverse path: each router uses its unicast route back towards the source (RPF) to decide where traffic should arrive from

- Reverse of normal (destination-based) routing

- Uses the shortest path from sender to receiver, which may or may not pass through a Rendezvous Point

- Used in PIM-DM and PIM-SM

- In PIM-DM, built with a flood and prune mechanism

- An interface cannot be both the incoming interface and in the OIL for the same (Source, Group)

- Verify command:


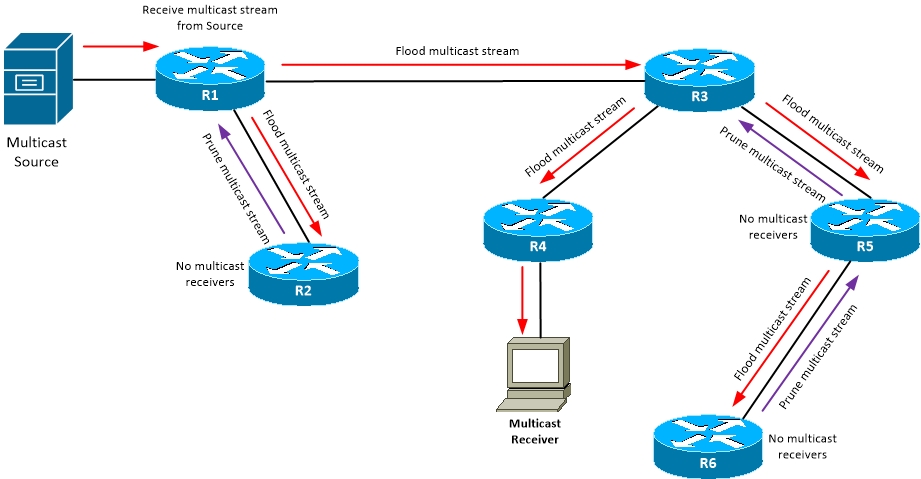

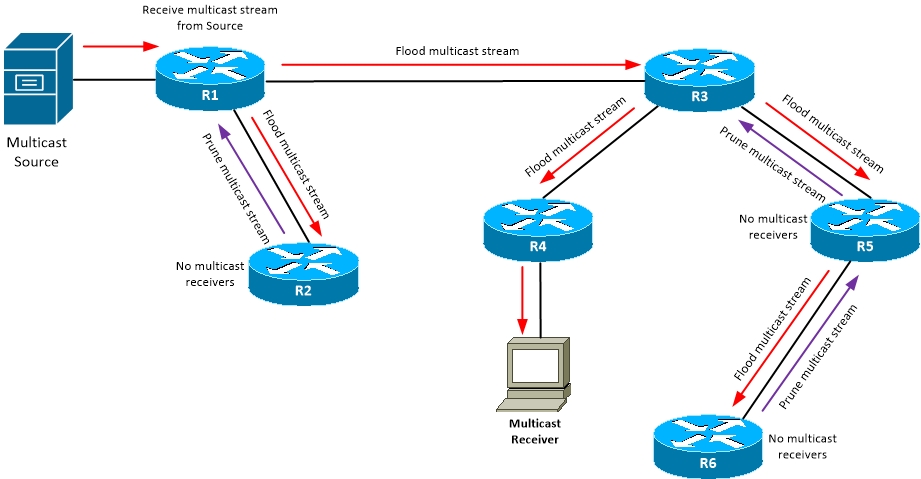

PIM Modes: Dense Mode (PIM-DM)

- RFC3973

- Legacy method

- All traffic flooded throughout multicast network

- Flood & Prune method

- Floods traffic to all PIM neighbors until a Prune message is received

- Implicit Join / Push Model

- One-way adjacency mechanism between neighbors

- Adjacency must be checked individually on both neighbors (both sides of the link)

- Scaling limitations

- Only suitable for small multicast deployments

- Enabled per-interface

- Command:

- (config-if)#ip pim dense-mode

- Once enabled, the router by default joins group 224.0.1.40 (Auto-RP Discovery group)

- Rule 1:

- The OIL of a PIM-DM (*,G) entry reflects other PIM-DM neighbors or directly connected members of the group

- Rule 2:

- Outgoing interfaces in PIM-DM (Source, Group) entries are not removed as a result of a Prune. They are marked Prune/Dense and left in the OIL

- Rule 3:

- When a new PIM-DM neighbor is added to the list of neighbors on an interface, the interface is reset to Forward/Dense state in all (Source, Group) OILs


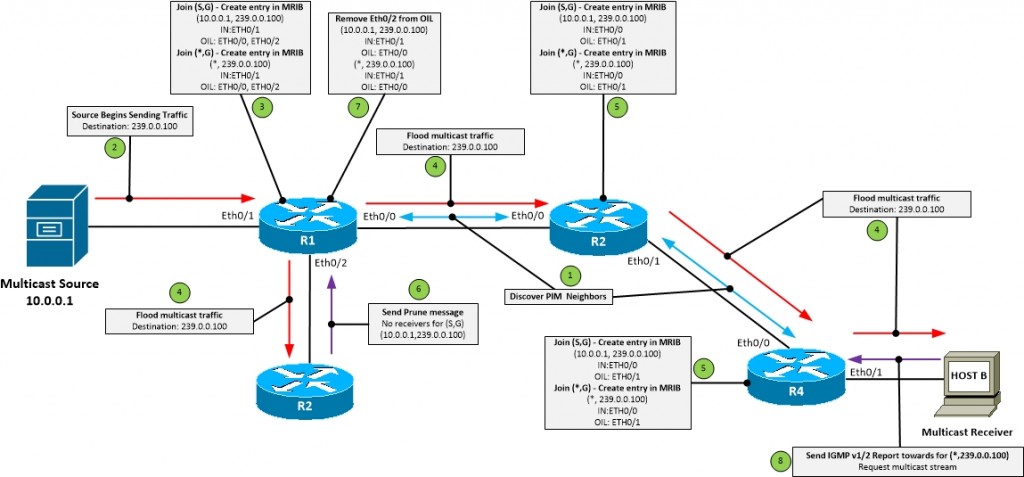

- Process:

- 1. Discover PIM neighbors

- 2. Source sends multicast stream for group 239.0.0.100

- 3. Insert (*,G) and (S,G) in MRIB.

- Incoming interface is the interface towards the Source (the RPF interface)

- Outgoing Interface List (OIL) is all other PIM-enabled interfaces

- 4. Multicast traffic flooded out the OIL

- 5. Repeat Step 3 on the next-hop router

- 6. Send Prune message

- Stop flooding traffic out the interface, or

- Tell the upstream router that multicast traffic for (Source, Group) is no longer required

- 7. Update the MRIB for (*,G) and (S,G) and prune the interfaces that no longer require the multicast stream (they stay in the OIL, marked Prune/Dense)
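The flood and prune steps can be modelled on a loop-free flood tree (what the RPF check leaves after flooding). A branch stays in forwarding state only if some router below it has directly connected receivers; every other branch is pruned. The sketch ignores timers, State Refresh and Grafts, and the dictionary-based topology and function name are hypothetical.

```python
def flood_and_prune(tree, root, receivers):
    """Return (forwarding, pruned) sets of (upstream, downstream) links.

    tree:      router -> routers it floods to (the RPF-derived flood tree)
    receivers: routers with directly connected group members
    """
    forwarding, pruned = set(), set()

    def wants_traffic(router):
        children = tree.get(router, [])
        # Visit every child (no short-circuit) so every pruned link is recorded.
        results = [wants_traffic(child) for child in children]
        for child, wanted in zip(children, results):
            # A child with nothing below it sends a Prune upstream.
            (forwarding if wanted else pruned).add((router, child))
        return router in receivers or any(results)

    wants_traffic(root)
    return forwarding, pruned

tree = {"R1": ["R2", "R3"], "R2": ["R4"], "R3": ["R5"]}
fwd, pruned = flood_and_prune(tree, "R1", receivers={"R4"})
print(sorted(fwd))     # [('R1', 'R2'), ('R2', 'R4')]
print(sorted(pruned))  # [('R1', 'R3'), ('R3', 'R5')]
```

R5 has no members, so it prunes towards R3; R3 then has nothing left downstream and prunes towards R1, matching steps 6 and 7.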


PIM-DM Messages: PRUNE

- Prune Message sets the Flag: P in the packet

- Prune on the following conditions:

- RPF (Reverse Path Forwarding) check fails

- There are no downstream receivers

- Downstream neighbors have already sent prune

- Once a prune has happened

- Traffic for (Source, Group) stops but (S, G) still remains in multicast table


PIM-DM Messages: GRAFT

- Sent when a router has pruned a (Source, Group) and then receives an IGMP Report for that group

- Graft message acts as the PIM-DM Join message

- Unprune (Source, Group) from upstream neighbor

- Speeds up convergence

- Doesn't wait for periodic flooding


PIM-DM Messages: ASSERT

- Prune duplicate multicast streams

- Typically used when there are multiple multicast routers attached to a segment

- Triggered when (Source, Group) stream received on an interface already in the OIL

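- Winner determined by:

- Lowest administrative distance (metric preference) of the route to the Source of the stream

- If equal, lowest metric to the Source

- If metric also equal, highest IP address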
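The Assert election reduces to a tuple comparison. This sketch assumes the comparison order used by RFC 3973: metric preference first (on Cisco, the administrative distance of the route to the source), then the route metric, then the highest IP address. `AssertCandidate` and `assert_winner` are hypothetical names.

```python
import ipaddress
from dataclasses import dataclass

@dataclass
class AssertCandidate:
    router_ip: str
    admin_distance: int   # metric preference of the route to the source
    metric: int           # unicast route metric to the source

def assert_winner(candidates):
    # Lowest AD, then lowest metric, then highest IP (negated so min() picks it).
    return min(candidates, key=lambda c: (c.admin_distance, c.metric,
                                          -int(ipaddress.IPv4Address(c.router_ip))))

a = AssertCandidate("10.1.1.1", 110, 20)
b = AssertCandidate("10.1.1.2", 110, 20)
print(assert_winner([a, b]).router_ip)  # 10.1.1.2 (tie broken by highest IP)
```

The winner keeps forwarding onto the segment; the loser prunes its interface for that (S,G).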


PIM-DM Messages: STATE REFRESH

- Once traffic is pruned, it is re-flooded every 3 minutes

- The State Refresh is a keepalive for Prune state

- Originated by the root of the SPT (Shortest Path Tree)

- If downstream router agrees, traffic is not re-flooded

- Doesn't fix issue of (Source, Group) staying in multicast routing table

- Configured only on the First Hop Router (FHR), on the interface facing the source

- Command:

- (config-if)#ip pim state-refresh origination-interval <1-100 seconds>


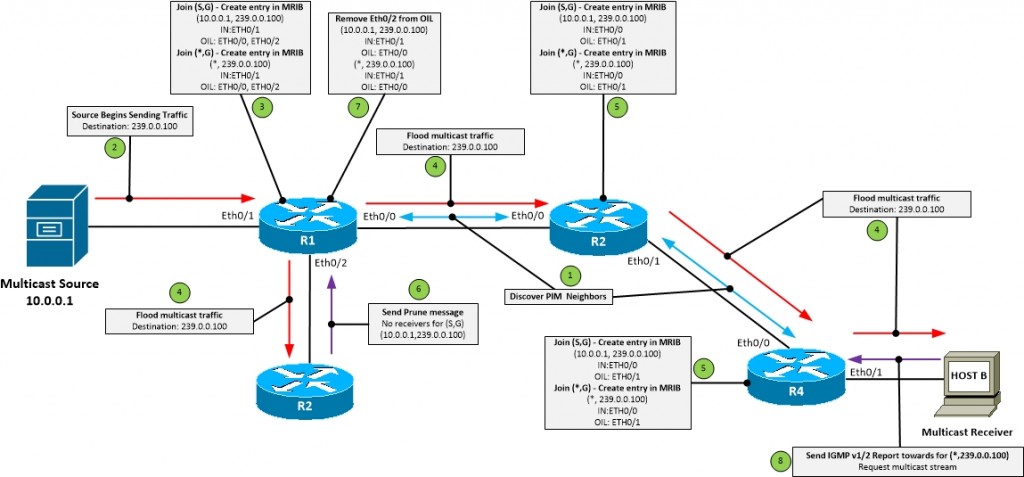

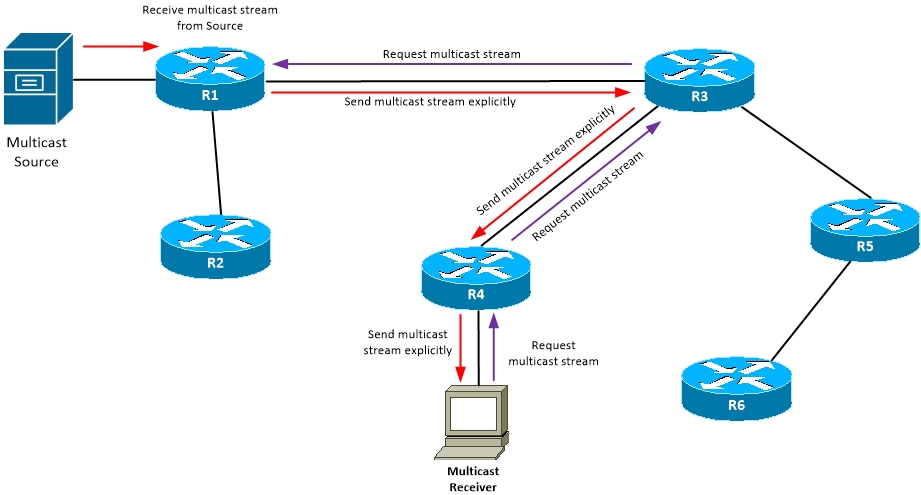

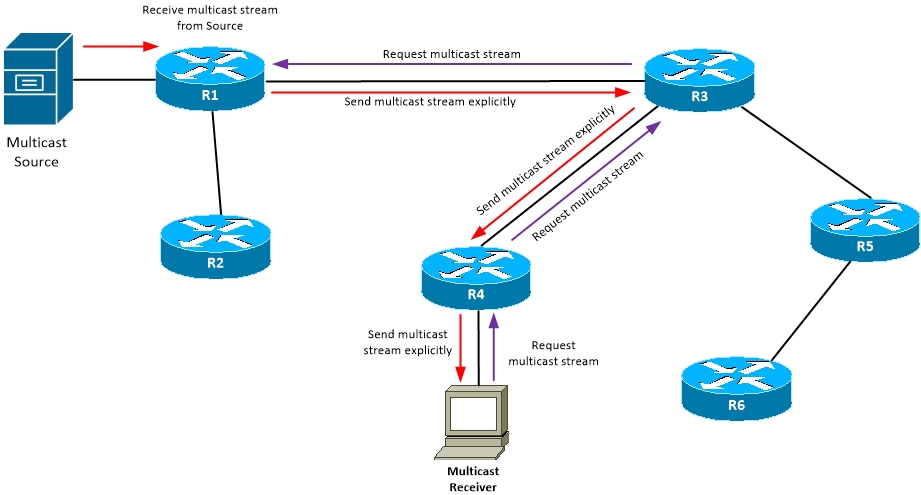

PIM Modes: Sparse Mode (PIM-SM)

- RFC4601

- Only sends traffic to nodes that ask for it

- Explicit Join / Pull Model

- Uses Rendezvous Points (RPs) to process Join messages

- Requires at least one RP

- Once the Shortest Path Tree (SPT) from sender to receiver is built, traffic flows along it

- More scalable than PIM Dense Mode

- Uses Shared Trees (RPT - Rendezvous Point Trees) and Shortest Path Trees (SPT)

- PIM-SM refreshes state periodically so feeds do not time out

- Similar in purpose to PIM-DM State Refresh

- (*,G) Join sent towards the RP, or (S,G) Join sent up the SPT, to refresh the OIL

- Prune message can be used to speed up state timeout when an IGMP Leave is heard from an end host

- Enabled per-interface

- Command:

- (config-if)#ip pim sparse-mode

- Rule 1:

- A PIM-SM (*, G) entry is created as the result of an Explicit Join

- Rule 2:

- The incoming interface of a PIM-SM (*,G) entry always points up the Shared Tree (RPT) towards the RP

- Rule 3:

- A PIM-SM (S, G) entry is created when:

- A (S, G) Join/Prune message is received

- The LHR switches over to the SPT

- (S, G) traffic arrives unexpectedly when no (*, G) exists

- The RP receives a PIM Register message

- Rule 4:

- An interface is added to the OIL for (*, G) or (S, G) when:

- A (*, G) or (S, G) Join is received via this interface

- A directly connected group member exists on the interface

- Rule 5:

- An interface is removed from the OIL of (*, G) or (S, G) when:

- A (*, G) or (S, G) Prune is received and there is no directly connected member

- The interface expiration timer expires (counts down to 0)

- Rule 6:

- The expiration timer is reset to 3 minutes when:

- A (*, G) or (S, G) Join is received on the interface

- An IGMP Membership Report is received from a directly connected member on the interface

- Rule 7:

- Routers send an (S, G) RP-bit Prune up the Shared Tree (RPT) when the RPF neighbor for the (S, G) entry is different from the RPF neighbor of the (*, G) entry

- Rule 8:

- The RPF interface (incoming interface) of (S, G) is calculated using the IP address of the Source, except when the RP-bit is set, in which case the IP address of the RP is used


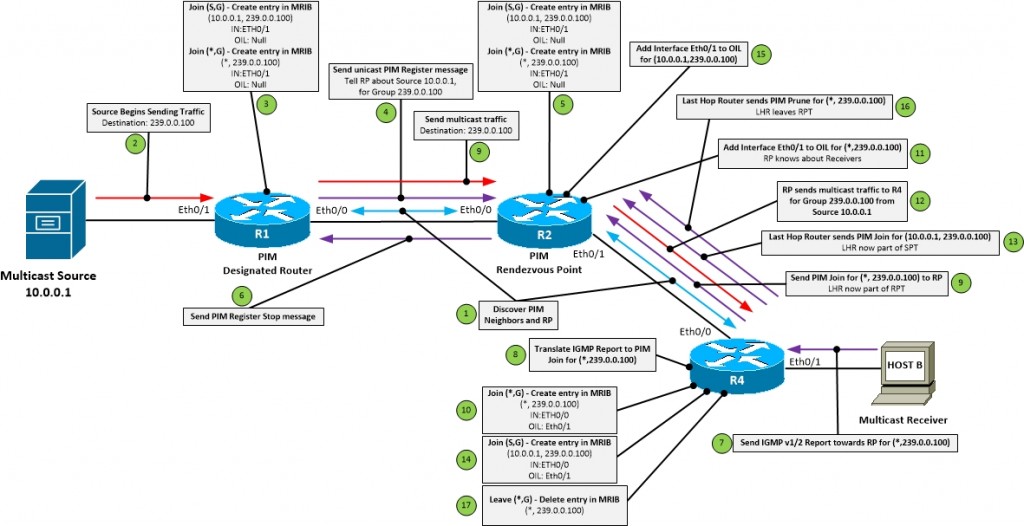

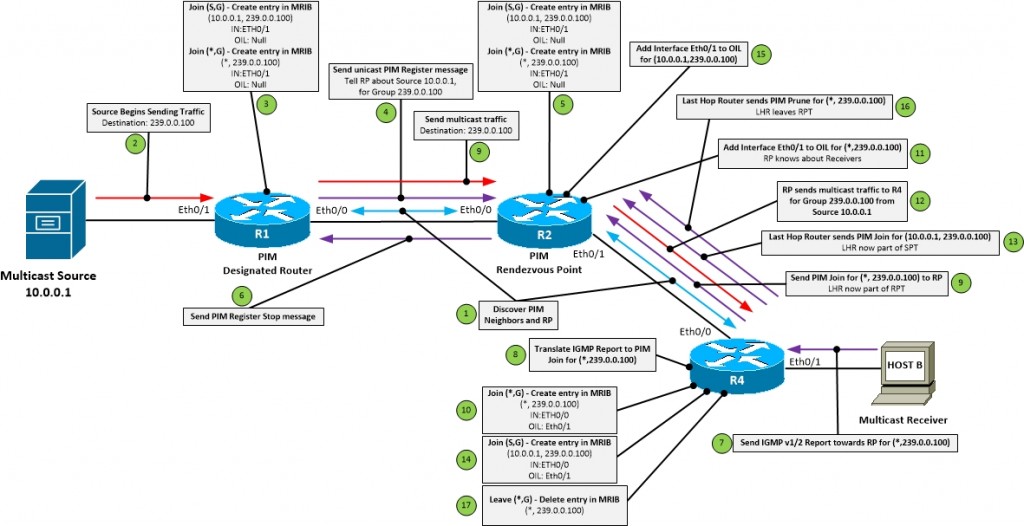

- Process:

- 1. Discover PIM neighbors and elect PIM Designated Router (DR)

- 2. Source begins sending traffic

- 3. Create entry in PIM DR MRIB for (*,G) and (S,G)

- 4. Tell Rendezvous Point about Sources by sending unicast PIM Register message

- 5. Create entry in RP for Shared Tree (*,G) and Source Tree (S,G). Set the OIL to Null, as the RP doesn't yet know about any Receivers

- 6. RP acknowledges DR Register message with Register Stop message

- 7. Multicast Receiver sends IGMP Report for (*,G) to receive multicast feed. Receiver doesn't yet know about Source

- 8. Tell the Rendezvous Point about Receivers: the Last Hop Router (the PIM DR on the receiver segment) translates the IGMP Report into a PIM Join for (*,G)

- 9. Send PIM Join for (*, G) toward RP. Last hop Router now joined Shared Tree (RPT)

- 10. Create entry in R4 MRIB for (*,G) with IN towards RP and OIL towards receiver

- 11. RP adds interface towards R4 (Eth0/1) to OIL for (*,G). RP now knows about Receivers

- 12. RP sends Multicast traffic to R4 for Group 239.0.0.100 from Source 10.0.0.1

- 13. R4 sends PIM Join to RP for (S,G). R4 now part of Source Tree (SPT)

- 14. R4 creates entry in MRIB for (S,G)

- 15. RP adds interface towards R4 (Eth0/1) to OIL for (S,G).

- 16. R4 sends PIM Prune message to RP for (*,G)

- 17. R4 deletes the entry in its MRIB for (*,G). R4 has now left the Shared Tree (RPT)


PIM-SM Messages: REGISTER

- As root of all shared trees, RP must know about all sources

- When First Hop Router connected to source hears traffic, unicast register message sent to RP

- If multiple FHR then only the DR registers

- If the RP accepts the message

- It is acknowledged with a Register Stop message

- The RP inserts (Source,Group) into its table

- At this point only the DR and the RP know (Source,Group)

- The RP can be configured manually to accept Register messages only for specific sources

- Command (on RP):

- (config)#ip pim accept-register list <extended acl>

- Extended ACL needs to be in the format of:

- [permit | deny] ip <source address> <source mask> <group address> <group mask>


PIM-SM Messages: JOIN

- As root of all shared trees, RP must know about all receivers

- When Last Hop Router receives IGMP Report message

- PIM Join is generated up the reverse path tree towards the RP

- All routers in the reverse path install the (*,G) and forward the join hop-by-hop to the RP

- At this point the RP and all downstream devices towards the receiver know the (*,G)
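The hop-by-hop Join can be sketched as a walk along each router's RPF neighbor towards the RP, installing (*,G) with the incoming interface pointing upstream and the interface the Join arrived on added to the OIL. As a simplification, each router holds state for a single group, and a Join stops as soon as it reaches a router that is already on the shared tree. The topology, interface names and function name are all hypothetical.

```python
def propagate_join(rpf_neighbor, lhr, rp, group, mroutes=None):
    """Walk a (*,G) Join from the Last Hop Router towards the RP.

    rpf_neighbor: router -> its upstream (RPF) neighbor towards the RP
    mroutes:      existing per-router (*,G) state for this group, updated in place
    """
    mroutes = {} if mroutes is None else mroutes
    router, downstream = lhr, "receivers"
    while True:
        already_on_tree = router in mroutes
        entry = mroutes.setdefault(router, {"entry": ("*", group), "iif": None, "oil": set()})
        # The interface the Join (or IGMP Report) arrived on joins the OIL.
        entry["oil"].add(f"to-{downstream}")
        if already_on_tree:
            return mroutes               # merged into the existing shared tree
        if router == rp:
            entry["iif"] = "Null"        # the RP is the root of the RPT
            return mroutes
        upstream = rpf_neighbor[router]
        entry["iif"] = f"to-{upstream}"  # IIF points up the RPT
        router, downstream = upstream, router

rpf = {"R4": "R3", "R5": "R3", "R3": "RP"}   # hypothetical topology
tree = propagate_join(rpf, "R4", "RP", "239.0.0.100")
propagate_join(rpf, "R5", "RP", "239.0.0.100", tree)
print(sorted(tree["R3"]["oil"]))  # ['to-R4', 'to-R5']
```

The second Join stops at R3 because R3 already has (*,G) state, so the RP's OIL is unchanged.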


Rendezvous Point (RP)

- Used for Multicast control plane traffic

- Used as a reference point for root of Shared Tree (RPT)

- RP is used to merge two trees together

- Source to RP

- Learns about multicast sources with PIM Register messages

- (Source, Group)

- Receiver to RP

- Learns about receivers using PIM Join messages

- Multicast Receiver sends IGMP Report message for application flow

- Report message converted into PIM Join message for (*, Group)

- Tells RP to add an interface to the OIL for (*,Group)

- Merging the Trees

- Once the RP knows about the Source and the receiver

- RP sends a PIM Join up reverse path to the source

- All routers up the reverse path from the RP to the source install (S,G) with the OIL pointing towards the RP

- Once (Source,Group) begins to flow the tree is built end-to-end through the RP

- All multicast devices have to agree on the RP address

- Per-group basis

- Register and Join messages are rejected for invalid RPs

- RP address can be allocated either:

- Manually

- Required to be configured on all devices in the path

- Command:

- (config)#ip pim rp-address <RP address>

- Dynamically using:

- Auto-RP (Cisco proprietary)

- Preferred over statically configured RPs

- BSR (Open standard built in PIMv2)

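- The SPT from receiver to source may not be the same as the Shared Tree (RPT)

- Using the SPT is more efficient when the shortest path to the Source does not pass through the RP

- Joins the SPT with a (Source, Group) Join towards the Source

- Leaves the RPT by sending a (*,Group) Prune to the RP

- By default, Cisco IOS switches to the SPT as soon as the first packet arrives; this threshold can be changed manually

- Command:

- (config)#ip pim spt-threshold {<kbps> | infinity} [group-list <acl>]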

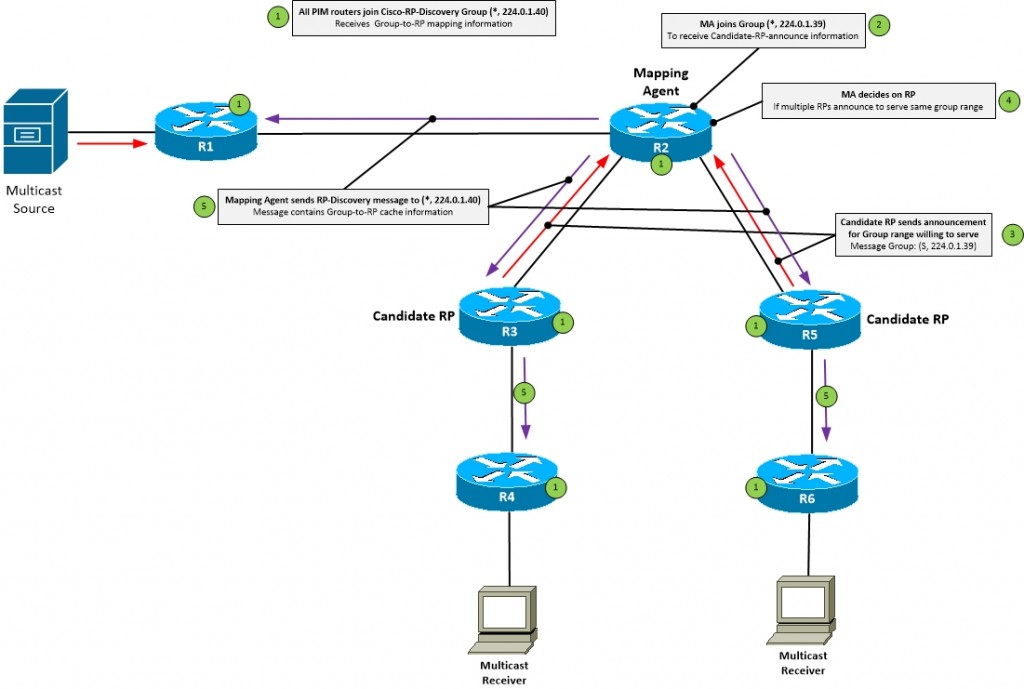

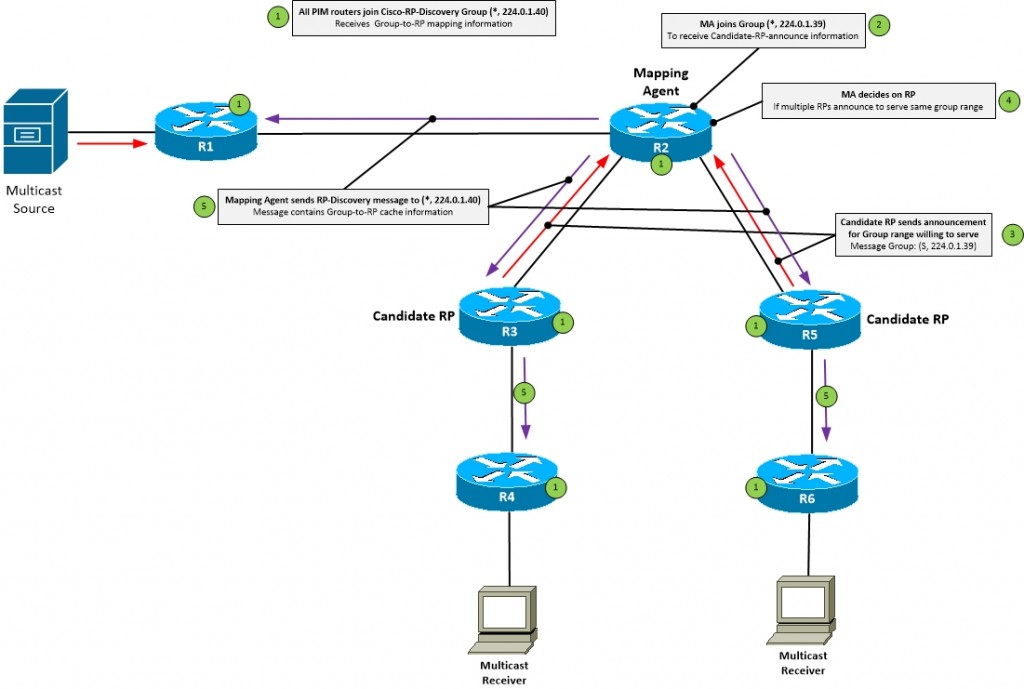

AutoRP

- Cisco Proprietary

- Legacy protocol

- Transport UDP/496

- Used by PIM-SM as dynamic advertisement of RPs

- Candidate RP

- Devices that can be the RP for specified group range

- Can have more than one Candidate RP

- Command:

- (config)#ip pim send-rp-announce <source IF> scope <TTL> group-list <acl for group-range>

- Mapping Agent

- Decides RP between candidates

- Tells other PIM enabled routers about Group-to-RP mapping information

- Command:

- (config)#ip pim send-rp-discovery <source IF> scope <TTL>


- 1. All PIM enabled routers join the well-known Cisco-RP-Discovery multicast group (*, 224.0.1.40)

- This is so devices can receive Group to RP mapping information

- 2. Mapping Agent (MA) joins the well-known Cisco-RP-Announce multicast group (*, 224.0.1.39)

- 3. Candidate RP sends RP-announce message to (S, 224.0.1.39) for group range the RP is willing to serve to the Mapping Agent (MA)

- RP-Announce message includes hold-time for how long that RP is valid for that group

- 4. Mapping Agent decides on RP if multiple candidate RP serve the same group range and updates local Group-to-RP cache

- Highest RP IP wins tie-breaker

- 5. Mapping Agent multicasts the contents of the Group-to-RP mapping cache in RP-Discovery messages to all other PIM-enabled routers

- RP-Discovery messages include a hold-time for how long the Group-to-RP mapping information is valid

- Messages should be filtered at the edge using a Multicast Boundary

- Command:

- (config-if)#ip multicast boundary <ACL of groups to filter> filter-autorp
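The Mapping Agent's tie-break (highest Candidate RP IP address per group range) is sketched below. It treats each group range as an exact string and ignores hold-times and negative group-list entries, so it is illustrative only; the function name is hypothetical. Comparing `IPv4Address` objects rather than strings matters: as text, "10.0.0.9" would sort above "10.0.0.10".

```python
import ipaddress

def mapping_agent_select(announcements):
    """announcements: (candidate RP address, group range) pairs heard on 224.0.1.39."""
    group_to_rp = {}
    for rp, group_range in announcements:
        current = group_to_rp.get(group_range)
        # Highest Candidate RP IP address wins for the same group range.
        if current is None or ipaddress.IPv4Address(rp) > ipaddress.IPv4Address(current):
            group_to_rp[group_range] = rp
    return group_to_rp

announcements = [("10.0.0.9", "224.0.0.0/4"), ("10.0.0.10", "224.0.0.0/4"),
                 ("10.0.0.2", "239.0.0.0/8")]
print(mapping_agent_select(announcements))
# {'224.0.0.0/4': '10.0.0.10', '239.0.0.0/8': '10.0.0.2'}
```

The resulting dictionary stands in for the Group-to-RP cache that step 5 multicasts in RP-Discovery messages.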


- Can have multiple RP Candidates to provide load distribution

- Split group ranges between candidates; the Mapping Agent can filter which Candidate RP announcements it accepts for which groups

- Command:

- (config)#ip pim rp-announce-filter rp-list <std-acl> group-list <std-acl>

- Recursive (chicken-and-egg) issue:

- Unable to join the Auto-RP groups without knowing who the RP is

- Unable to know who the RP is without joining the Auto-RP groups

- Can be resolved with one of the following methods

- Statically assign an RP for the Auto-RP groups

- Command:

- (config)#ip pim rp-address <RP address> [<acl of group-range>]

- Use PIM Sparse-Dense mode

- Auto-RP listener feature

- Treats the Auto-RP groups 224.0.1.39 (RP-Announce) and 224.0.1.40 (RP-Discovery) as PIM-DM

- Command:

- (config)#ip pim autorp listener


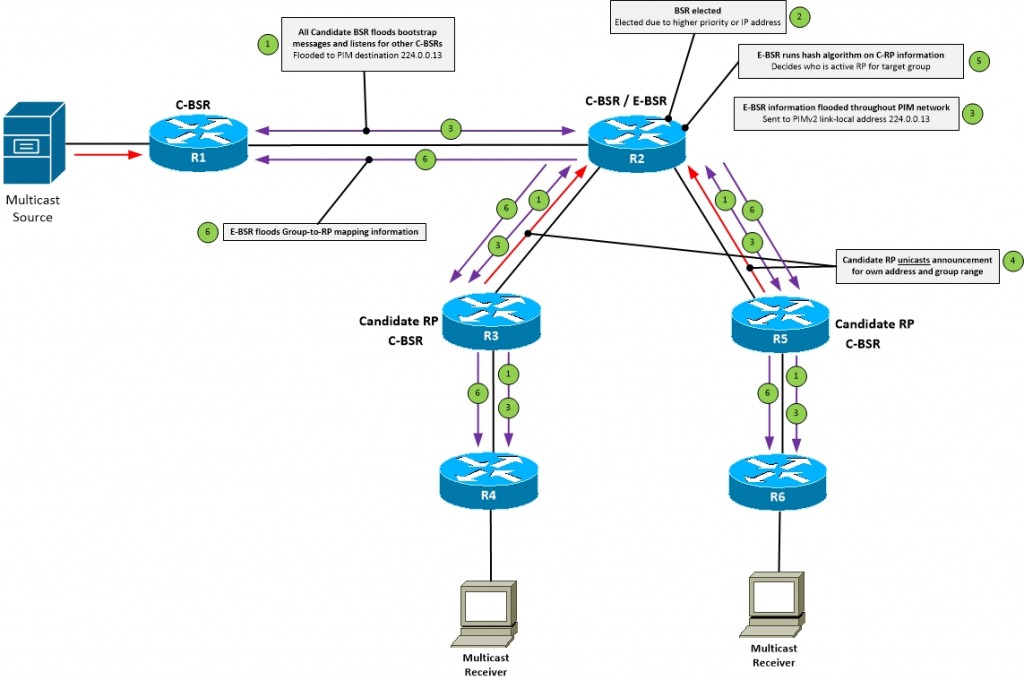

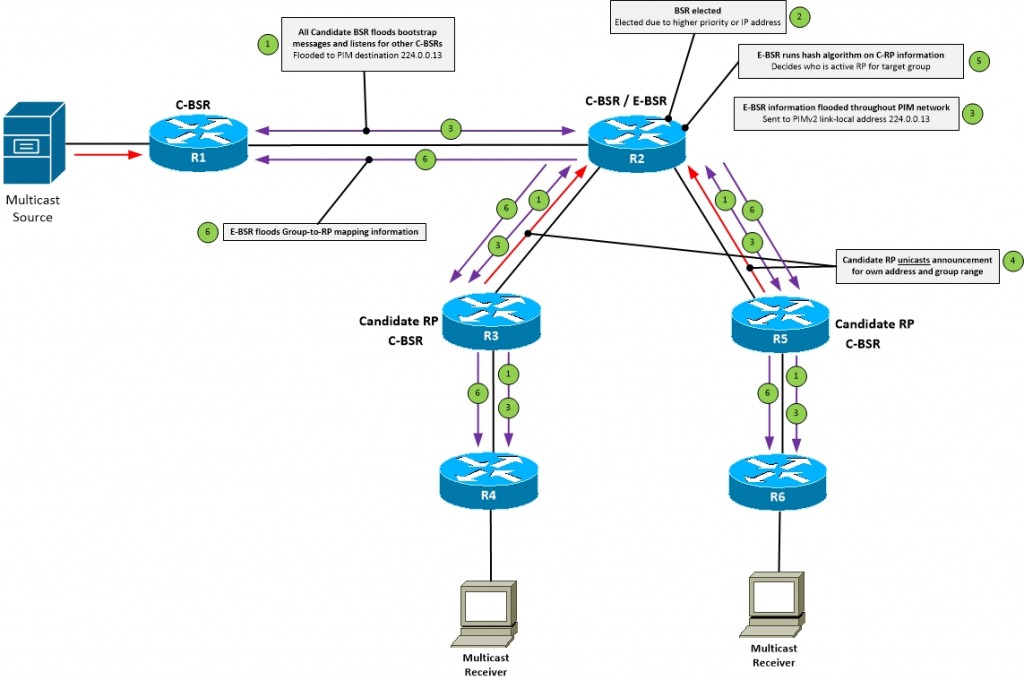

Bootstrap Router (BSR)

- RFC5059

- Open Standard of dynamic RP configuration

- Built into the PIMv2 specification

- From IOS version 11.3

- Doesn't use any PIM-DM groups to flood RP information

- Information flooded using PIM messages

- Candidate RP

- Same as Auto-RP Candidate RP

- Details which groups willing to serve

- Cannot use negative (deny) group lists, unlike Auto-RP

- Can have more than one Candidate RP

- Uses unicast PIM to advertise itself to the Bootstrap Router

- Command:

- (config)#ip pim rp-candidate <source IF> group-list <group-acl> priority <0-255>

- Lower priority value is preferred

- On Cisco default is 0

- RFC 5059 specifies a default value of 192

- Bootstrap Router

- Listens for Candidate RP announcements

- Uses hop-by-hop flooding of BSR messages to advertise Group-to-RP mapping information

- Uses PIMv2 link-local address (224.0.0.13)

- BSR advertisements carried in PIMv2

- Command:

- (config)#ip pim bsr-candidate <source IF> [hash-mask-length] [priority]

- Creates a group range to RP set mapping

- Details which Candidate RPs are willing to serve which groups

- Prefers higher priority value for BSR

- Default priority is 0

- Uses highest IP address as a tie-breaker

- BSR election is preemptive

- Can implement RP load balancing using special hashing function

- Selects best RP from set that services same group list


- 1. Every router configured as a Candidate BSR floods bootstrap messages and listens for other BSR candidates

- Messages flooded to PIMv2 multicast address 224.0.0.13 on hop-by-hop basis

- 2. One Candidate BSR in PIM network becomes Elected BSR router

- Determined by higher priority or higher BSR IP address

- Candidate BSR gives up BSR role if receives better message

- If BSR fails then another will take over, providing redundancy

- 3. All routers in the PIM network learn about the Elected BSR including Candidate RPs through flooding every 60 seconds (default)

- BSR messages contain

- Unicast IP address of the BSR, taken from the interface specified in the bsr-candidate command

- RP hash mask length specified in bsr-candidate command

- BSR priority specified in bsr-candidate command. Default is 0.

- Candidate RP-set learned from all Candidate RPs via C-RP advertisement messages

- 4. Every Candidate RP unicasts own address and group mappings to E-BSR using advertisement messages

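- 5. Each router runs the hashing algorithm on all C-RPs in the RP-set whose advertised group range matches the target group (the E-BSR distributes the RP-set; it does not pick the RP itself)

- C-RPs with the best (lowest) priority value are considered first; among them, the one with the highest hash value is selected as the active RP for the target group

- Tie-breaker is the highest RP IP address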

- 6. E-BSR floods Group-to-RP mapping information throughout PIM domain

- Should be manually filtered at the edge using BSR Border or administrative scoping based on TTL

- Command:

- (config-if)#ip pim bsr-border
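The hash used to pick an RP from the BSR RP-set is defined in the PIM-SM specification (the Hash Function section of RFC 7761, and RFC 4601 before it): Value(G, M, C) = (1103515245 * ((1103515245 * (G & M) + 12345) XOR C) + 12345) mod 2^31, where G is the group, M the hash mask and C the Candidate RP address. The sketch applies it after filtering on priority, with the highest IP address as the final tie-breaker. The hash mask length defaults to 0 here purely for the example; function names are hypothetical.

```python
import ipaddress

def ip_to_int(addr: str) -> int:
    return int(ipaddress.IPv4Address(addr))

def pim_hash(group: str, crp: str, mask_len: int) -> int:
    # Value(G, M, C) from the PIM-SM specification.
    mask = (0xFFFFFFFF << (32 - mask_len)) & 0xFFFFFFFF
    g_masked = ip_to_int(group) & mask
    return (1103515245 * ((1103515245 * g_masked + 12345) ^ ip_to_int(crp)) + 12345) % 2**31

def select_rp(group: str, rp_set, mask_len: int = 0) -> str:
    """rp_set: (C-RP address, priority) pairs whose group range covers `group`."""
    # 1. Only C-RPs with the best (lowest) priority value are considered.
    best = min(priority for _, priority in rp_set)
    finalists = [rp for rp, priority in rp_set if priority == best]
    # 2. Highest hash value wins; 3. highest IP address breaks a tie.
    return max(finalists, key=lambda rp: (pim_hash(group, rp, mask_len), ip_to_int(rp)))

rp_set = [("10.0.0.1", 0), ("10.0.0.2", 0), ("10.0.0.3", 5)]
print(select_rp("239.1.1.1", rp_set, mask_len=30))
```

With a mask length of 0, every group masks to the same value, so one RP serves all groups. Longer masks spread blocks of groups across the equal-priority C-RPs, which is how the hash provides RP load balancing.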


- BSR announce filter can permit/deny Candidate RP for specific groups

- Same as announce filter on Auto-RP

- Command:

- (config)#ip pim bsr-candidate <source IP> accept-rp-candidate <acl for group range>


Source Specific Multicast (SSM)

- Recommended Design if application can support it

- Uses a reserved address range

- 232.0.0.0/8

- Anything in this range will only have (S,G) not (*,G)

- Receiver knows the source before it wants to join stream

- Receiver uses IGMPv3 Report message for (S,G) join

- RP not needed to build the Shared Tree (RPT) - (*, G)

- Application already knows the Source

- RP not needed to build the control plane, reduces RP reliance

- Result is SSM uses only Source Trees (SPT) - (S,G)

- The LHR sends an (S,G) PIM Join up the RPF path towards the source, so each tree is an SPT for (S,G)

- An RP mapping for 224.0.0.0/4 does not serve the 232.0.0.0/8 SSM range

- SSM groups are always checked first and never used to send a (*,G) join

- If PIM receives a (*,G) join for a group in the 232.0.0.0/8 range, it drops it

- Anything outside the 232.0.0.0/8 range can use IGMPv1/v2 (*,G) joins

- No Register message is sent for a group in the SSM range

- The tree can only be built once a receiver joins the group

- Enable multicast routing globally

- (config)#ip multicast-routing [distributed]

- Define global SSM group range

- (config)#ip pim ssm {default | range <acl>}

- PIM SSM default supports 232.0.0.0/8

- Enable PIM Sparse Mode at interface level

- (config-if)#ip pim sparse-mode

- Enable IGMPv3 on links to Receivers

- (config-if)#ip igmp version 3

- IGMPv3 doesn't need to be enabled on any other links; SSM is used automatically for the configured range
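How a router treats joins for the SSM range can be sketched as a simple decision. It assumes the default SSM range (`ip pim ssm default`, 232.0.0.0/8) and represents a (*,G) join as `source=None`; `handle_join` is a hypothetical name and the returned strings are just labels.

```python
import ipaddress

SSM_RANGE = ipaddress.ip_network("232.0.0.0/8")  # what `ip pim ssm default` covers

def handle_join(source, group, ssm_range=SSM_RANGE):
    """source=None models a (*,G) join (IGMPv1/v2); an address models an (S,G) join."""
    in_ssm = ipaddress.ip_address(group) in ssm_range
    if source is None and in_ssm:
        return "drop"                          # (*,G) is never used for SSM groups
    if source is not None:
        return f"(S,G) join towards {source}"  # source already known, no RP involved
    return "(*,G) join towards RP"             # normal ASM behaviour

print(handle_join(None, "232.1.1.1"))        # drop
print(handle_join("10.0.0.1", "232.1.1.1"))  # (S,G) join towards 10.0.0.1
```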


Bidirectional PIM (BiDir)

- RFC 5015

- Scaling mechanism for the multicast routing table

- PIM-SM doesn't scale well for many-to-many applications, where hosts are both senders and receivers

- ASM forms two trees

- SPT from source to RP - (S,G) MRIB entry

- RPT from RP to receivers - (*,G) MRIB entry

- Bidirectional PIM solves this by building only the RPT (*,G), rooted at the RP, and no SPT (S,G)

- Reduces the number of mroute entries

- Only one entry is needed for all sources sending to a common group
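The scaling benefit is easiest to see with a rough count of mroute state on the RP. The sketch below assumes a simplified PIM-SM case where every source in every group creates one (S,G) entry alongside one (*,G) per group; real tables also vary with SPT switchover and pruning, so treat the numbers as illustrative. `mroute_entries` is a hypothetical helper.

```python
def mroute_entries(groups: int, sources_per_group: int, bidir: bool) -> int:
    """Rough mroute entry count on the RP for many-to-many groups (simplified model)."""
    if bidir:
        # Bidir: a single (*,G) per group, shared by every source
        return groups
    # PIM-SM: one (*,G) per group plus one (S,G) per active source
    return groups * (1 + sources_per_group)


# 100 groups in which 50 hosts each send and receive
print(mroute_entries(100, 50, bidir=False))  # 5100
print(mroute_entries(100, 50, bidir=True))   # 100
```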

Bidir Designated Forwarder

- One DF elected per PIM segment

- Election is similar to the PIM-DM Assert process

- Lowest metric to the RP wins

- Highest IP address is used as a tiebreaker

- Only the DF can forward traffic upstream towards the RP

- All other interfaces in the OIL face downstream

- Removes the need for an RPF check

- All routers must agree on which groups are Bidir, or loops can occur
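The DF election rule can be sketched as picking the best offer on a segment. This is a simplified model with hypothetical names (`Offer`, `elect_df`): the notes describe lowest metric with highest IP as tiebreaker, and RFC 5015 additionally compares a metric preference (administrative distance) before the metric, which is included here as an assumption. Message exchange, timers and re-election are not modelled.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Offer:
    address: str     # router's IP address on the segment
    preference: int  # metric preference (admin distance) of its route to the RP
    metric: int      # unicast metric of its route to the RP


def elect_df(offers):
    """Return the address of the DF for one segment.

    Best = lowest preference, then lowest metric; the highest IP address
    breaks any remaining tie.
    """
    best = min(
        offers,
        key=lambda o: (o.preference, o.metric, -int(ipaddress.ip_address(o.address))),
    )
    return best.address


segment = [
    Offer("10.1.1.1", preference=110, metric=20),
    Offer("10.1.1.2", preference=110, metric=10),
    Offer("10.1.1.3", preference=110, metric=10),
]
print(elect_df(segment))  # 10.1.1.3 (tied on metric, highest IP wins)
```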


- 1. Define RP and group range as bidirectional

- Prevents (S,G) state being created for that range

- 2. Build a single (*,G) RPT towards RP

- Traffic flows upstream from Source to RP

- Traffic flows downstream from RP to Receivers

- 3. Removes the PIM Register process

- Not needed, because traffic from sources always flows to the RP

- 4. Uses Designated Forwarder (DF) for loop prevention
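The resulting forwarding rule for a Bidir (*,G) entry can be sketched as below. It is a simplified model under stated assumptions, not an implementation: `bidir_forward` is a hypothetical name, interfaces are plain strings, the router already knows its RPF interface towards the RP and the segments where it won the DF election, and a packet is accepted only on the RPF interface or on a segment where this router is the DF.

```python
def bidir_forward(in_if, rpf_if, df_ifs, joined_ifs):
    """Return the set of interfaces a Bidir (*,G) packet is sent out of, or None if dropped.

    rpf_if     -- interface towards the RP (upstream)
    df_ifs     -- interfaces where this router is the elected DF
    joined_ifs -- downstream interfaces with interested receivers
    """
    # Only the DF picks up traffic from a segment; the RPF interface
    # carries traffic coming down from the RP
    if in_if != rpf_if and in_if not in df_ifs:
        return None
    # Always forward towards the RP, plus downstream segments where
    # this router is DF and receivers exist
    oil = {rpf_if} | (set(joined_ifs) & set(df_ifs))
    # Never send a packet back out of the interface it arrived on
    return oil - {in_if}


df = {"Gi0/1", "Gi0/2"}
print(sorted(bidir_forward("Gi0/1", "Gi0/0", df, {"Gi0/2"})))  # ['Gi0/0', 'Gi0/2']
print(bidir_forward("Gi0/3", "Gi0/0", df, {"Gi0/2"}))          # None
```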


- Enable PIM Bidir globally

- (config)#ip pim bidir-enable

- Configure RP as Bidirectional

- Statically

- (config)#ip pim rp-address <rp address> bidir

- Using Auto-RP

- (config)#ip pim send-rp-announce <source IF> scope <scope> bidir

- Using BSR

- (config)#ip pim rp-candidate <source IF> bidir


PIM Modes: Sparse-Dense Mode (PIM-SDM)


- Cisco proprietary

- Hybrid combination of PIM-DM and PIM-SM

- Uses PIM-DM for groups without an RP

- e.g. the Auto-RP groups (*, 224.0.1.39) and (*, 224.0.1.40)

- Uses PIM-SM for all other groups

- Any group that loses its RP information (e.g. an RP learned through Auto-RP) automatically falls back to PIM-DM

- Dense-mode fallback can be disabled manually

- Command:

- (config)#no ip pim dm-fallback

- Enabled per-interface

- Command:

- (config-if)#ip pim sparse-dense-mode
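The per-group mode decision in sparse-dense mode can be sketched as: find an RP mapping for the group, otherwise fall back to dense mode. This is a hypothetical model (`group_mode`, a dict of RP mappings keyed by group range, with the most specific range winning as an assumption); what happens to traffic when fallback is disabled is reduced to a label rather than modelled.

```python
import ipaddress


def group_mode(group, rp_mappings, dm_fallback=True):
    """Return the PIM mode used for a group on a sparse-dense interface (simplified).

    rp_mappings maps a group range (e.g. "239.0.0.0/8") to an RP address,
    however it was learned (static, Auto-RP or BSR).
    """
    g = ipaddress.ip_address(group)
    matches = [
        (ipaddress.ip_network(rng), rp)
        for rng, rp in rp_mappings.items()
        if g in ipaddress.ip_network(rng)
    ]
    if matches:
        # Most specific group range wins (assumption for this sketch)
        _, rp = max(matches, key=lambda m: m[0].prefixlen)
        return "sparse (RP " + rp + ")"
    # No RP known for the group
    return "dense" if dm_fallback else "sparse (no RP)"


mappings = {"239.0.0.0/8": "10.255.0.1"}
print(group_mode("239.1.1.1", mappings))                     # sparse (RP 10.255.0.1)
print(group_mode("224.0.1.39", mappings))                    # dense
print(group_mode("230.1.1.1", mappings, dm_fallback=False))  # sparse (no RP)
```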


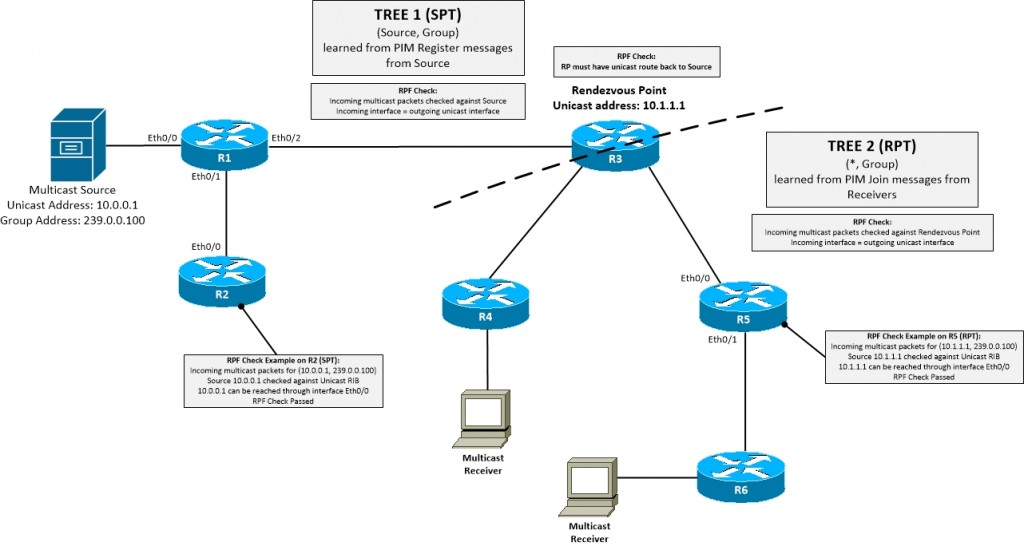

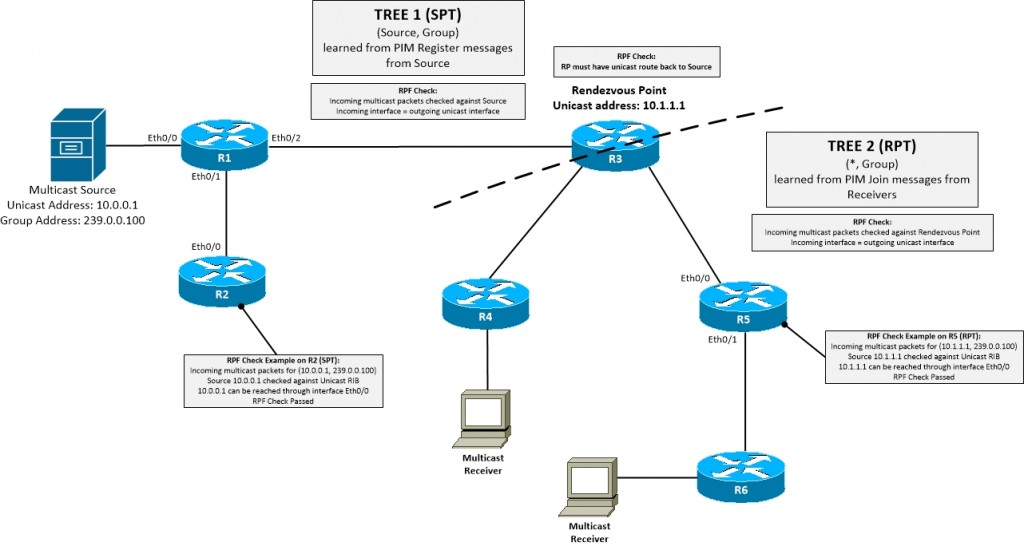

Reverse Path Forwarding (RPF)


- Ensures multicast traffic follows a loop-free path

- Before forwarding a multicast packet, the data plane performs an RPF check

- Based on the unicast routing table

- #show ip route multicast

- Database used only for the RPF check, not for forwarding

- Uses split-horizon-like behaviour

- An interface cannot be both the incoming interface and in the OIL

- Determines how the tree must be built

- Can be modified

- Manually

- Command:

- (config)#ip mroute <src address> <netmask> <RPF neighbor | interface>

- Dynamically with Multicast BGP

- When a multicast packet is received:

- The data plane looks at the source IP and incoming interface

- The CEF table is checked for the unicast reverse path back to the source (the upstream interface)

- RPF Pass

- The incoming multicast interface equals the outgoing unicast interface towards the source (the RPF interface)

- Packets are forwarded from the incoming interface out of all OIL interfaces

- RPF Fail

- The incoming multicast interface differs from the outgoing unicast interface towards the source

- The packet is dropped

- Shortest Path Tree (SPT) RPF Check

- Checked against the source

- Used in (S,G) trees

- PIM Dense Mode

- PIM Sparse Mode, from the RP to the source

- PIM Sparse Mode, from the receiver to the source after SPT switchover

- PIM Source Specific Multicast (SSM)

- Shared Tree (RPT) RPF Check

- Checked against the RP

- Register messages

- The RP must have a route back to the source being registered

- (S,G) state can't be registered if there is no route

- Used in (*,G) trees

- PIM Sparse Mode, from the receiver to the RP before SPT switchover
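The RPF pass/fail logic above can be modelled as a longest-prefix lookup followed by an interface comparison. A minimal sketch, assuming a toy unicast RIB held as a dict of prefix to outgoing interface (standing in for the CEF table); `rpf_interface`, `rpf_check` and `forward` are hypothetical names, and ECMP, static mroutes and MBGP are ignored.

```python
import ipaddress


def rpf_interface(address, rib):
    """Longest-prefix match: the unicast outgoing interface back towards address."""
    ip = ipaddress.ip_address(address)
    best = None
    for prefix, iface in rib.items():
        net = ipaddress.ip_network(prefix)
        if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, iface)
    return best[1] if best else None


def rpf_check(in_if, source, rib, rp=None, shared_tree=False):
    """SPT (S,G): check against the source. Shared tree (*,G): check against the RP."""
    target = rp if shared_tree else source
    return rpf_interface(target, rib) == in_if


def forward(in_if, source, oil, rib, **kwargs):
    """On RPF pass, replicate out of the OIL (minus the incoming interface); on fail, drop."""
    if not rpf_check(in_if, source, rib, **kwargs):
        return set()
    return set(oil) - {in_if}


rib = {"10.0.0.0/8": "Gi0/0", "10.1.0.0/16": "Gi0/1", "192.0.2.1/32": "Gi0/2"}
print(rpf_check("Gi0/1", "10.1.2.3", rib))  # True: /16 is the longest match
print(rpf_check("Gi0/0", "10.1.2.3", rib))  # False: RPF fail, packet dropped
print(rpf_check("Gi0/2", "10.1.2.3", rib, rp="192.0.2.1", shared_tree=True))  # True
```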


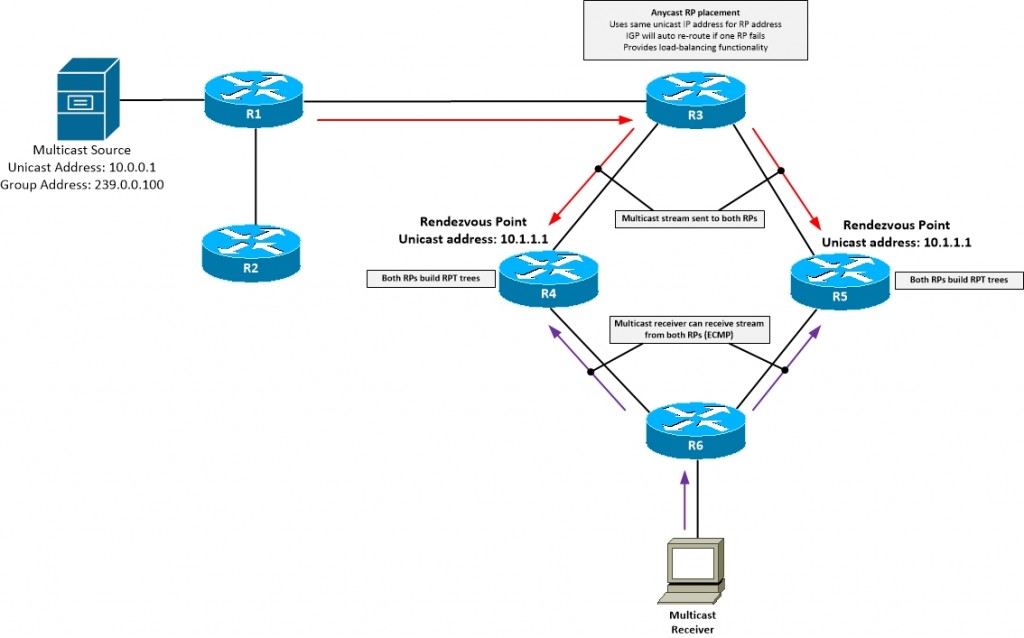

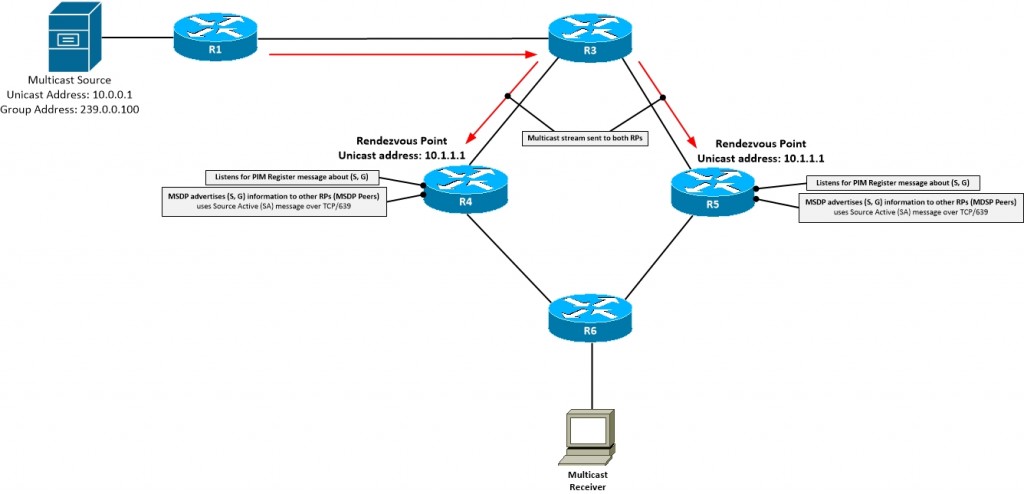

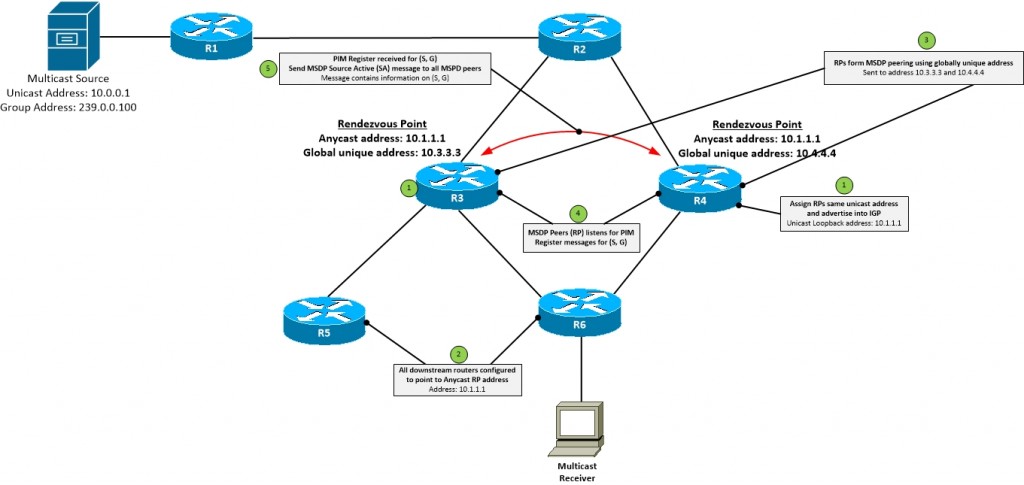

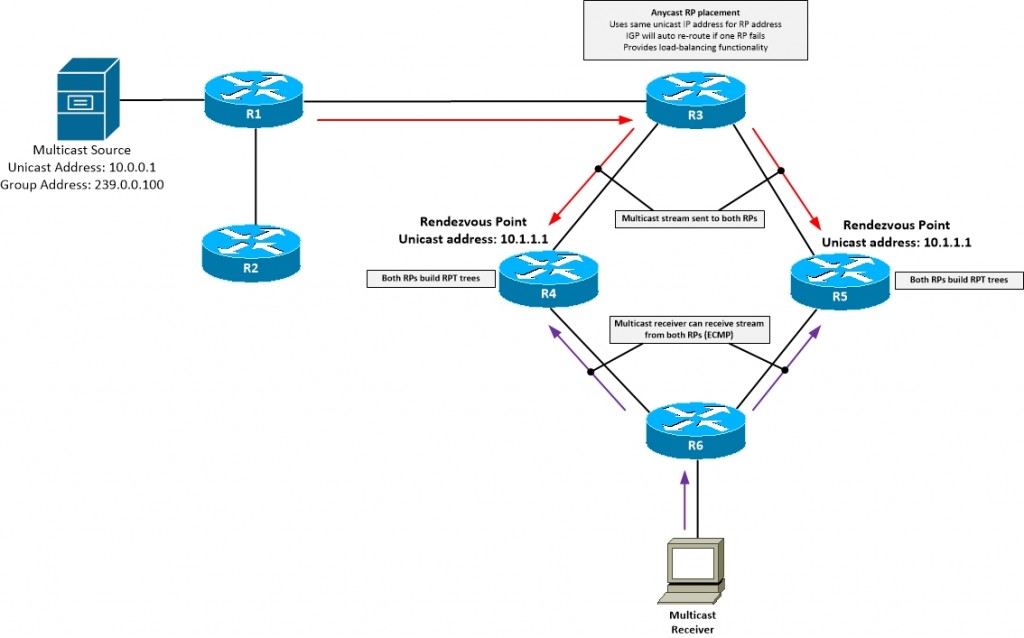

Anycast and Multicast Source Discovery Protocol (MSDP)


- Anycast: multiple destinations share the same address

- Traffic is routed to the closest one based on IGP/EGP metrics

- Can be used as a method to load-balance

- No application awareness

- Example

- RFC3258 – Distributing Authoritative Name Servers via Shared Unicast Addresses

- Assign each device the same IP address and advertise it in the IGP

- A client's location in the physical topology dictates which host it reaches

- If a single device fails, routing converges and finds the next nearest device

- Anycast RP uses anycast load balancing to decentralise the placement of PIM-SM RPs

- If one RP fails, the IGP routes to the next closest

- As long as one RP is available, new trees can be built

- RP failure doesn't necessarily affect existing trees

- Anycast RP design issues:

- All RPs must share the same information about senders and receivers

- What if a PIM Register message is sent to one Anycast RP and a PIM Join to another?

- One RP knows about the sender

- The other RP knows about the receiver

- The tree can't be built if no single RP knows about both sender and receiver

- Multicast Source Discovery Protocol (MSDP) is used to solve this

- Anycast Caveats

- Ensure control plane protocols don't use the Anycast address as an identifier

- e.g. to prevent duplicate Router IDs

- A unique address is required to sync multicast source information between RPs

- Application hosted on an Anycast address

- Data sync between Anycast members must use routable, globally unique addresses
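From any one router's point of view, anycast selection is just the lowest-metric reachable member, recomputed after a failure. A minimal sketch with hypothetical member names and IGP metrics; each member is keyed by a unique identifier, which mirrors the caveat that members still need unique addresses of their own.

```python
def nearest_member(metrics, failed=frozenset()):
    """Pick the anycast member this router reaches, by lowest IGP metric.

    metrics maps a member's unique ID to the IGP metric from this router.
    Returns None if every member has failed.
    """
    alive = {member: m for member, m in metrics.items() if member not in failed}
    if not alive:
        return None
    return min(alive, key=alive.get)


rp_metrics = {"RP1": 20, "RP2": 10, "RP3": 30}
print(nearest_member(rp_metrics))                  # RP2
print(nearest_member(rp_metrics, failed={"RP2"}))  # RP1, after the IGP reconverges
```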


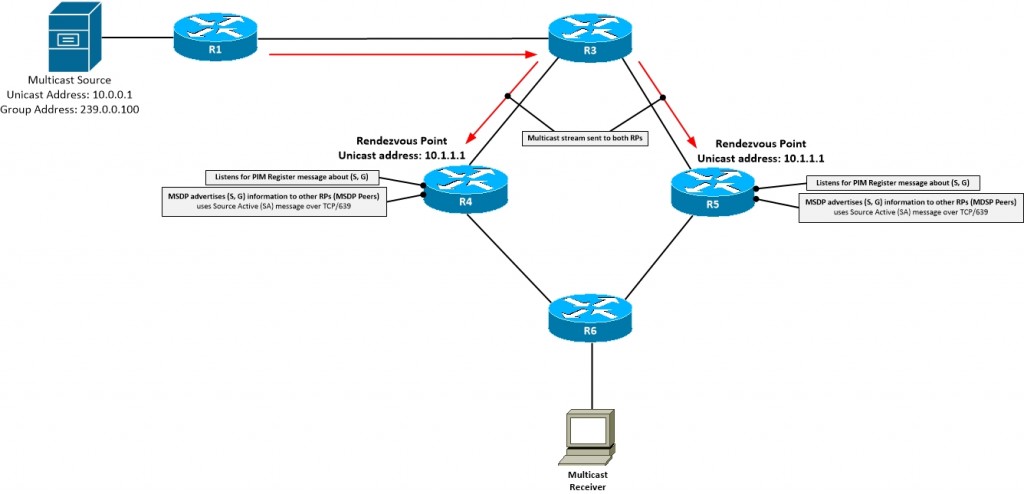

Multicast Source Discovery Protocol (MSDP)

- RFC 3618

- Transport: TCP port 639

- Advertises (Source, Group) information between RPs

- Allows PIM domains to use independent RPs

- Originally designed for Inter-AS Multicast

- MSDP is enabled globally

- Commands:

- (config)#ip msdp peer <MSDP peer unique IP> connect-source <Local MSDP source IF>

- (config)#ip msdp originator-id <Local MSDP source IF> (RPF-checked address)


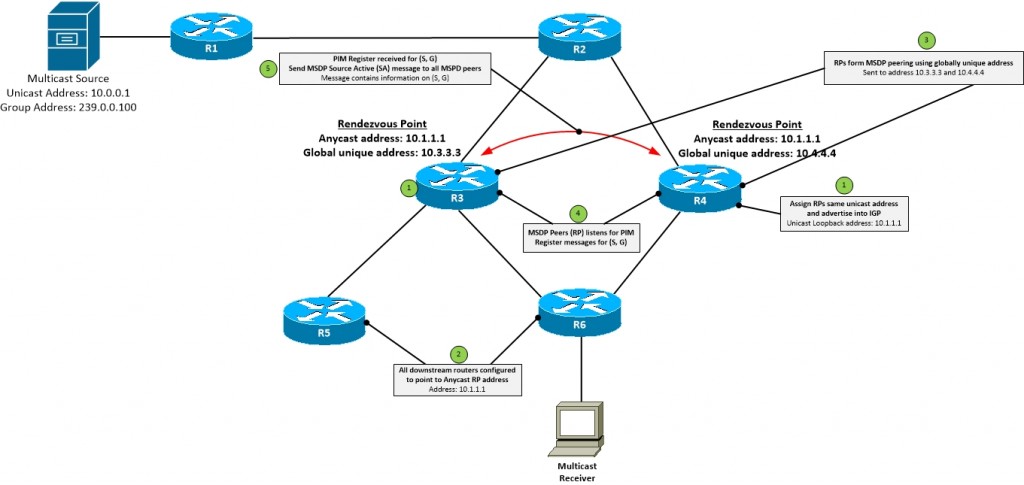

- 1. Anycast RPs are configured with the same Loopback address, which is advertised into the IGP

- 2. All routers point to the Anycast address as the RP, using static or dynamic configuration

- 3. Anycast RPs form MSDP peerings using unique addresses

- Each RP requires a unique, globally routable Loopback address in addition to the Anycast Loopback address

- 4. RPs listen for PIM Register messages

- 5. When a Register message is received, the RP sends an MSDP Source Active (SA) message to its MSDP peers

- SA message contains:

- (Source, Group) information

- Originator ID of the RP
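The Anycast RP and MSDP steps above can be sketched as two RPs sharing Register-learned sources through SA messages. This is a toy model with hypothetical names (`AnycastRP`, `receive_register`, `receive_sa`, `sources_for`); real MSDP runs over TCP/639, performs peer-RPF checks on the SA originator and re-floods SAs, none of which is modelled here.

```python
from dataclasses import dataclass, field


@dataclass
class AnycastRP:
    unique_ip: str  # unique Loopback used for MSDP peering and as the originator ID
    peers: list = field(default_factory=list, repr=False, compare=False)
    local_sources: set = field(default_factory=set)  # (S,G) learned from PIM Register
    sa_cache: set = field(default_factory=set)       # (S,G,originator) learned via MSDP SA

    def receive_register(self, source, group):
        """Step 5: a Register arrives, so send an SA to every MSDP peer."""
        self.local_sources.add((source, group))
        for peer in self.peers:
            peer.receive_sa(source, group, originator=self.unique_ip)

    def receive_sa(self, source, group, originator):
        # Peer-RPF check and re-flooding to other peers are omitted in this sketch
        self.sa_cache.add((source, group, originator))

    def sources_for(self, group):
        """Sources this RP can join towards when a (*,G) Join arrives for group."""
        local = {s for s, g in self.local_sources if g == group}
        learned = {s for s, g, _ in self.sa_cache if g == group}
        return local | learned


rp1, rp2 = AnycastRP("192.0.2.1"), AnycastRP("192.0.2.2")
rp1.peers.append(rp2)
rp2.peers.append(rp1)

# The source registers with RP1, but the receiver's Join lands on RP2
rp1.receive_register("10.1.1.10", "239.1.1.1")
print(rp2.sources_for("239.1.1.1"))  # {'10.1.1.10'}
```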


Multicast BGP (MP-BGP)

- Inter-AS Multicast uses MSDP and PIM

- MSDP advertises sources, in addition to PIM

- PIM builds the tree and performs the RPF check

- To run multicast over the Internet, it must be enabled on all hops

- What if the RPF check for a multicast source is via a unicast only peer?

- Multicast BGP solves this by separating unicast RPF and multicast RPF

- Replaces static ip mroute statements

- Multicast BGP advertises source networks for the purpose of the RPF check

- Multicast BGP is preferred over unicast protocols for the multicast RPF check

- Dynamic version of a static multicast route

- Doesn’t require a separate routing protocol, uses BGP extensions

- RFC 4760 – Multiprotocol Extensions for BGP 4

- BGP peers negotiate the Multicast AF during the capabilities exchange

- Peers advertise NLRI of sources (not receivers) under the Multicast AF

- For the RPF check on received multicast traffic, MBGP-learned routes are preferred over unicast routes

- Configuration:

- (config)#router bgp <AS>

- (config-router)#address-family ipv4 multicast

- (config-router-af)#neighbor <mcast neighbor> activate

- (config-router-af)#network <unicast prefix> mask <subnet mask>
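The effect of separating unicast and multicast RPF can be sketched as a lookup that tries the MBGP (multicast AF) table first and falls back to the unicast table. This follows the simplified preference stated in these notes; real routers may also weigh administrative distance between static mroutes, MBGP and unicast routes, which is not modelled. `longest_match`, `rpf_lookup` and the peer names are hypothetical.

```python
import ipaddress


def longest_match(address, table):
    """Return the next hop of the longest matching prefix in table, or None."""
    ip = ipaddress.ip_address(address)
    best = None
    for prefix, next_hop in table.items():
        net = ipaddress.ip_network(prefix)
        if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_hop)
    return best[1] if best else None


def rpf_lookup(source, mbgp_table, unicast_table):
    """Choose the route used for the RPF check: MBGP first, unicast otherwise."""
    hop = longest_match(source, mbgp_table)
    if hop is not None:
        return ("mbgp", hop)
    hop = longest_match(source, unicast_table)
    return ("unicast", hop) if hop is not None else None


unicast = {"0.0.0.0/0": "unicast-only-peer"}
mbgp = {"198.51.100.0/24": "multicast-peer"}
print(rpf_lookup("198.51.100.7", mbgp, unicast))  # ('mbgp', 'multicast-peer')
print(rpf_lookup("203.0.113.5", mbgp, unicast))   # ('unicast', 'unicast-only-peer')
```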

Troubleshooting Multicast


#show ip mroute – Displays multicast routing table

#show ip mfib – Displays multicast forwarding table

#show ip pim neighbors – Displays PIM local neighbor adjacencies; check both directions, as adjacency is one-way (each router lists neighbors from the Hellos it receives)

#show ip mroute count – Shows if packets are being dropped because of RPF failure

#show ip mfib count – Shows if packets are being dropped because of RPF failure

#show ip pim tunnel

#show ip pim rp

#show ip pim rp mapping – All potential RPs and the groups they are servicing

#show ip pim interface – Displays PIM enabled interfaces

#show ip rpf <prefix> – Displays RPF information for individual prefixes

#show ip igmp groups – Displays IGMP group membership information

#show ip igmp membership – Displays IGMP membership information used for forwarding

#debug ip pim

#debug ip pim bsr

#debug ip mfib pak – Debug Multicast MFIB packet forwarding


#show ip msdp peers

#debug ip msdp detail

#show ip msdp sa-cache

#show bgp ipv4 multicast

#mtrace – Multicast trace